AI Bubble Won't Just Take Down the Stock Market - It Could Hammer the US Economy, Too

The US economy in 2025 stands at a critical juncture: artificial intelligence and data center investment are driving an unprecedented concentration of economic growth that masks fragility beneath the surface. Harvard economist Jason Furman's analysis reveals a sobering reality: investment in information processing equipment and software, the category that captures most AI-related capital expenditure, accounted for approximately 92% of US GDP growth in the first half of 2025, even though it constitutes only 4% of total GDP. This extreme dependence on a single sector, combined with historical parallels to previous technological bubbles, stretched valuations despite stronger earnings, massive debt accumulation among technology firms, and emerging infrastructure constraints, creates a scenario in which a correction in AI asset values would not merely deflate financial markets. It would transmit devastating shocks through the real economy via multiple channels: a collapse in consumer spending, corporate investment freezes, employment losses, and potential financial system stress.[1][2]

H1 2025 US GDP Growth Composition: AI Investment as the Dominant Driver

The Artificial Intelligence Boom as the Engine of Growth

Between January and June 2025, US economic growth became almost entirely dependent on technology infrastructure investment, with data center construction, semiconductor purchases, and cloud capacity expansion serving as the primary growth drivers. The investment wave extended far beyond technology companies themselves: construction employment surged as firms broke ground on massive new facilities, utilities experienced record demand from power-intensive operations, and financial services benefited from the demand for capital to fund the buildout. In August 2025, Renaissance Macro Research estimated that the contribution of AI data center construction to GDP growth had surpassed that of US consumer spending for the first time, a remarkable development given that consumer expenditure typically accounts for roughly two-thirds of GDP.[1][3]

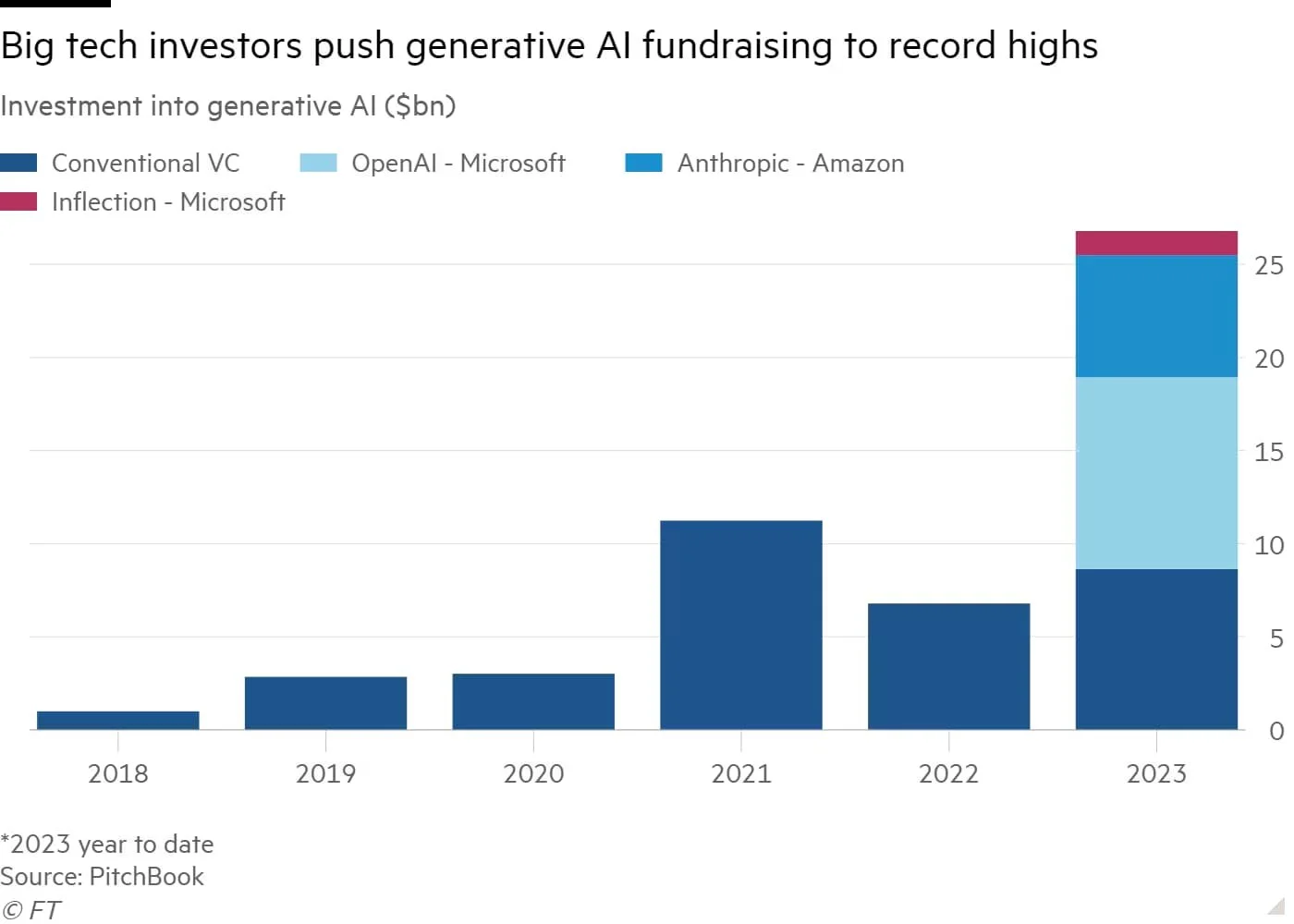

The scale of this commitment is genuinely transformative. Hyperscalers including Microsoft, Amazon, Meta, Alphabet, and Oracle collectively planned to deploy approximately $370 billion in capital expenditures in 2025 alone, an increase of roughly 60% over 2024's $230 billion and dwarfing most other industrial investment waves in modern history. Microsoft reported quarterly capex of $34.9 billion in the first quarter of fiscal 2026 (the quarter ending September 2025), up from $24.2 billion just one quarter prior, while Meta raised its annual spending guidance to $70-72 billion, nearly double its $37.3 billion in 2024. This capital deployment extends throughout the economy: power generation facilities, transmission infrastructure, construction labor, semiconductor manufacturing, and financial services all benefit from the upstream demand generated by hyperscaler investment.[3][4]

Hyperscaler Capital Spending: $370 Billion Annual AI Infrastructure Investment

Yet this economic growth engine rests on an untested premise: that massive expenditures on AI infrastructure will generate commensurate returns through productivity gains and new revenue streams. According to MIT's 2025 research on enterprise AI adoption, approximately 95% of organizations implementing generative AI see zero measurable return on investment within six months of completing a pilot program. Advocates argue that traditional ROI metrics misapply industrial-era measurement to a transformational cognitive technology, but the underlying concern persists: companies are making unprecedented capital commitments based on projected productivity gains that have not yet materialized at scale. The MIT study found that despite $30-40 billion in enterprise investment in generative AI systems, the vast majority of implementations have stalled in pilots or failed to demonstrate measurable business impact. The same measurement problem extends to the macroeconomic level, where policymakers struggle to distinguish genuine productivity-enhancing investment from speculative asset inflation.[5]

Stock Market Gains Concentrated in AI Leaders

The concentration of market gains in a narrow group of technology stocks is one of the defining characteristics of the current boom and a critical source of systemic risk. The "Magnificent Seven" companies (Apple, Amazon, Alphabet, Meta, Microsoft, Nvidia, and Tesla) now constitute 31.5% of the S&P 500's total market capitalization, up from just 12% in 2015, a concentration level comparable to the late stages of the dot-com bubble. More striking still, just three companies, Nvidia, Microsoft, and Apple, collectively represent nearly 21% of the index, with Nvidia alone constituting over 8% at its peak valuation of $5 trillion.[6][7][8]

S&P 500 Market Concentration: The Magnificent Seven Dominates (October 2025)

This concentration dynamic has created a self-reinforcing feedback loop that amplifies both the gains during the rally and the risks during a correction. Rising stock valuations have enabled these dominant firms to raise capital on favorable terms, fueling further investment, which generates more positive earnings forecasts, which in turn drive additional stock appreciation. In year-to-date 2025 performance through October, the Magnificent Seven delivered returns ranging from 25% (Apple) to 138% (Nvidia), with the strongest performers far outpacing the broader S&P 500's 15% return. Wall Street analysts acknowledge this dynamic explicitly; as DataTrek Research noted, "We can think of no historical precedent for a handful of already sizable companies continuing to grow much faster than the rest of the S&P 500."[7][9]

The market concentration risk is amplified by the absence of historical precedent for this valuation structure. Excluding Tesla, the Magnificent Seven trade at an average of 30 times forward 12-month earnings, a 34% premium to the S&P 500's 22.4x multiple. While these forward multiples appear more reasonable than the 60x ratios seen at the dot-com peak, they mask a more insidious form of concentration, in which narrow market leadership has become self-reinforcing through momentum, index tracking, and algorithmic correlation. Passive funds that track a cap-weighted index allocate each new dollar in proportion to existing weights, so roughly 31.5 cents of every dollar of inflow goes to the Magnificent Seven; any price impact from that buying lifts their weights further, creating a gravity well for equity capital.[9]
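The allocation and premium arithmetic can be sketched in Python. This is a minimal illustration, assuming a fund that buys every constituent in proportion to its current index weight; the 31.5% weight and the 30x and 22.4x multiples come from the text, while the two-bucket split (`magnificent_seven` versus `rest_of_index`) and the helper names are hypothetical simplifications.

```python
# Minimal sketch: how a cap-weighted index fund splits a new inflow.
# Assumption: the fund buys every constituent pro rata to its current weight.
# The two-bucket split below is a hypothetical simplification for illustration.

def allocate_inflow(inflow: float, weights: dict[str, float]) -> dict[str, float]:
    """Split a passive inflow across constituents in proportion to index weight."""
    total = sum(weights.values())
    return {name: inflow * w / total for name, w in weights.items()}


def forward_pe_premium(group_pe: float, index_pe: float) -> float:
    """Premium of a group's forward P/E over the index multiple, as a fraction."""
    return group_pe / index_pe - 1.0


weights = {"magnificent_seven": 0.315, "rest_of_index": 0.685}
allocation = allocate_inflow(1.00, weights)
print(f"Per $1 of inflow: ${allocation['magnificent_seven']:.3f} to the Magnificent Seven")
print(f"Forward P/E premium: {forward_pe_premium(30.0, 22.4):.0%}")
```

Because the inflow is split pro rata, it leaves weights unchanged on its own; weights shift only when relative prices move, which is why price impact and momentum, rather than the inflow itself, do the reinforcing.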

Circular AI Investment Patterns Raise Concerns

One of the most troubling aspects of the AI boom is the emergence of circular financing patterns that substitute internal capital recycling for external demand validation. The investment flows create closed loops in which capital circulates within the AI ecosystem without clear evidence of end-user demand. Nvidia has committed to invest in OpenAI, which contracts with Oracle for $300 billion in cloud capacity; Oracle's valuation rises on the strength of those contracts, and Oracle in turn buys Nvidia chips to fulfill them. CoreWeave buys Nvidia GPUs, while Nvidia, also a CoreWeave shareholder, has agreed to purchase cloud capacity that CoreWeave cannot sell to other customers. This network of financial relationships obscures true demand signals and creates concentration risk, in which the failure of one participant could trigger cascading defaults through network effects.[10][11][12]
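One way to make the "closed loop" idea concrete is to treat these relationships as a directed graph and look for cycles. The sketch below is illustrative only: the edges are a stylized reading of the relationships described above, with reported chip purchases added to close the loops; they carry no dollar amounts, and `simple_cycles` is a small hand-written depth-first search, not a library routine.

```python
# Minimal sketch: find closed funding loops in a stylized capital-flow graph.
# Edges are an illustrative simplification (no dollar amounts), not a data set.

FLOWS: dict[str, list[str]] = {
    "Nvidia": ["OpenAI", "CoreWeave"],   # investment commitment / capacity backstop
    "OpenAI": ["Oracle"],                # cloud capacity contract
    "Oracle": ["Nvidia"],                # GPU purchases to fulfil the contract
    "CoreWeave": ["Nvidia"],             # GPU purchases
}


def simple_cycles(graph: dict[str, list[str]]) -> list[tuple[str, ...]]:
    """Return each simple directed cycle once, rotated to start at its smallest node."""
    found: set[tuple[str, ...]] = set()

    def dfs(start: str, node: str, path: list[str]) -> None:
        for nxt in graph.get(node, []):
            if nxt == start:
                i = path.index(min(path))        # canonical rotation removes duplicates
                found.add(tuple(path[i:] + path[:i]))
            elif nxt not in path:                # keep paths simple (no revisits)
                dfs(start, nxt, path + [nxt])

    for start in graph:
        dfs(start, start, [start])
    return sorted(found)


for cycle in simple_cycles(FLOWS):
    print(" -> ".join(cycle + (cycle[0],)))
```

Any cycle in such a graph marks revenue that is partly funded by the recipient's own capital; a fuller analysis would weight edges by contract size and test how a default at one node propagates around the loop.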

The debt financing of these investments amplifies this circular risk. Private credit firms including Blue Owl, Apollo, KKR, and Brookfield are now core participants in data center asset finance, with Blue Owl partnering with Meta on a $27 billion synthetic lease structure that keeps debt off Meta's balance sheet while maintaining effective control and usage rights. These financial engineering structures allow firms to expand aggressively while maintaining the appearance of moderate leverage, but they introduce counterparty risk and the potential for rapid repricing if underlying assumptions prove incorrect.[12]

Market Dependency on Continuing AI Capex

Strategy houses including Wells Fargo have explicitly anchored their equity market forecasts to the continuation of accelerating AI capital expenditure. Wells Fargo projects the S&P 500 to reach 7,200 by 2026, a level that requires sustained technology sector outperformance and uninterrupted capex growth. This conditional forecast reveals the structural dependency: if AI capex continues at current rates or accelerates further, valuations remain justified; if capex moderates or deteriorates, the economic foundation underlying current market levels erodes rapidly.[4]

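This dependency becomes particularly concerning when viewed through the lens of corporate financial conditions. Technology company capex budgets are not infinitely elastic: they respond to perceived returns, funding constraints, and competitive dynamics. Because a spending category's contribution to GDP growth depends on how much that spending changes from one period to the next rather than on its level, AI infrastructure investment does not need to fall to stop driving growth; if it merely plateaus, its contribution drops to roughly zero. A slowdown in the pace of AI investment, even a modest one, would therefore remove the primary driver of recent GDP growth, likely triggering downward earnings revisions and multiple contraction simultaneously.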
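The growth-accounting point can be checked with a short calculation. This sketch assumes the standard approximation that a spending component contributes its change divided by the prior period's GDP; the GDP level and capex figures are hypothetical round numbers in trillions of dollars, and the same GDP base is used for every scenario for simplicity.

```python
# Minimal sketch of growth accounting: a component's contribution to GDP growth
# is its change divided by last period's GDP. All figures are hypothetical round
# numbers in trillions of dollars, not estimates.

def contribution_pp(component_prev: float, component_now: float, gdp_prev: float) -> float:
    """Contribution of one spending component to GDP growth, in percentage points."""
    return 100.0 * (component_now - component_prev) / gdp_prev


gdp_prev = 30.0  # hypothetical GDP base, held fixed across scenarios for simplicity
scenarios = {
    "capex keeps growing": (1.00, 1.20),
    "capex plateaus": (1.20, 1.20),
    "capex falls 10%": (1.20, 1.08),
}
for label, (before, after) in scenarios.items():
    print(f"{label:>20}: {contribution_pp(before, after, gdp_prev):+.2f} pp")
```

The plateau scenario shows the key point: spending stays at a record level, yet its contribution to growth is zero.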

How a Burst Could Hit the Real Economy

A sharp correction in AI-related stocks would transmit powerful shocks to the real economy through multiple channels, with the initial impact flowing through the wealth effect on consumer spending. High-income households with substantial equity holdings have driven much of recent consumer spending growth, emboldened by rising portfolio valuations from the technology rally. Research from Moody's chief economist Mark Zandi indicates that "the spending surge is largely coming from affluent households with high incomes and substantial net worth, who are witnessing an increase in their stock portfolios, resulting in a greater sense of financial well-being and increased spending".[13]

Academic research quantifies this relationship: for every dollar of increased stock market wealth, consumer spending rises by approximately 2.8 cents per year. Applied to current conditions, if the Magnificent Seven's combined $25 trillion in market value were to experience a 30% correction (a decline that would still leave valuations higher than mid-2024 levels), the wealth destruction would amount to about $7.5 trillion. Using the empirical wealth effect coefficient, this would reduce annual consumer spending by roughly $210 billion, about 0.7% of a US economy with nominal GDP of roughly $30 trillion. That would subtract roughly 0.7 percentage point from GDP growth and could push the economy into contraction, given that AI capex, likely retrenching in the same correction, could not fill this consumer spending void.[14]
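The back-of-envelope calculation above can be reproduced in a few lines. This sketch uses the text's figures ($25 trillion of market value, a 30% drawdown, 2.8 cents of annual spending per dollar of wealth) and assumes nominal GDP of roughly $30 trillion as the denominator; `wealth_effect` is a hypothetical helper, and the linear coefficient ignores timing lags and distributional effects.

```python
# Back-of-envelope wealth-effect calculation using the figures in the text.
# Assumption: nominal GDP of ~$30 trillion as the denominator; the 2.8-cent
# coefficient is applied linearly, ignoring lags and who holds the shares.

def wealth_effect(market_value: float, drawdown: float, mpc: float) -> tuple[float, float]:
    """Return (wealth lost, annual spending reduction) in the same units."""
    wealth_lost = market_value * drawdown
    return wealth_lost, wealth_lost * mpc


lost, spending_cut = wealth_effect(market_value=25e12, drawdown=0.30, mpc=0.028)
gdp = 30e12  # assumed nominal GDP, USD
print(f"Wealth lost:  ${lost / 1e12:.1f} trillion")
print(f"Spending cut: ${spending_cut / 1e9:.0f} billion per year")
print(f"Share of GDP: {spending_cut / gdp:.1%}")
```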

The consumer spending channel is particularly vulnerable because it consists largely of discretionary purchases by affluent households, the group most sensitive to portfolio losses. Luxury markets are already under strain: Interbrand reports a 5% drop in personal luxury brand valuations in 2025 despite overall global brand value growth of 4.4%, and further equity declines would likely amplify this weakness. A 30% correction in technology equities would quickly curb spending on luxury goods, travel, dining, and other services that have been growing while manufacturing has remained weak.[15]

The Investment Pipeline and Corporate Capex Shock

The second transmission channel flows through corporate investment freezes. Large firms across the economy have already committed to substantial data center expansions, chip purchases, and cloud infrastructure orders based on forecasted returns that remain largely unproven. If technology valuations fall, even through multiple compression rather than fundamental deterioration, the cost of capital rises and the business case for new capex projects weakens immediately. IT equipment orders have led manufacturing growth in 2025, and a deceleration in these orders would cascade through the industrial economy.

Manufacturing employment, which has been relatively stable despite weakness in other sectors, is particularly exposed to this dynamic. Investment in computers and related equipment rose 41% year-over-year in 2025, driving much of the strength in industrial production. A reversal of this capex momentum would translate directly into factory job losses, particularly in regions whose economies have become concentrated around semiconductor and data center supply chains.[4]

The corporate debt dimension amplifies this risk. With $141 billion in AI-related debt issuance already recorded in 2025, surpassing the entire $127 billion from 2024, many of these capital projects are financed by debt that carries covenants and margin requirements. Should data center projects generate lower-than-expected returns or face demand downgrades, firms could face credit rating pressure and rising refinancing costs, creating self-reinforcing loops in which deteriorating credit conditions force capex reductions to preserve balance sheet metrics.[12][16]

Historical Parallels: The Dot-Com Caution

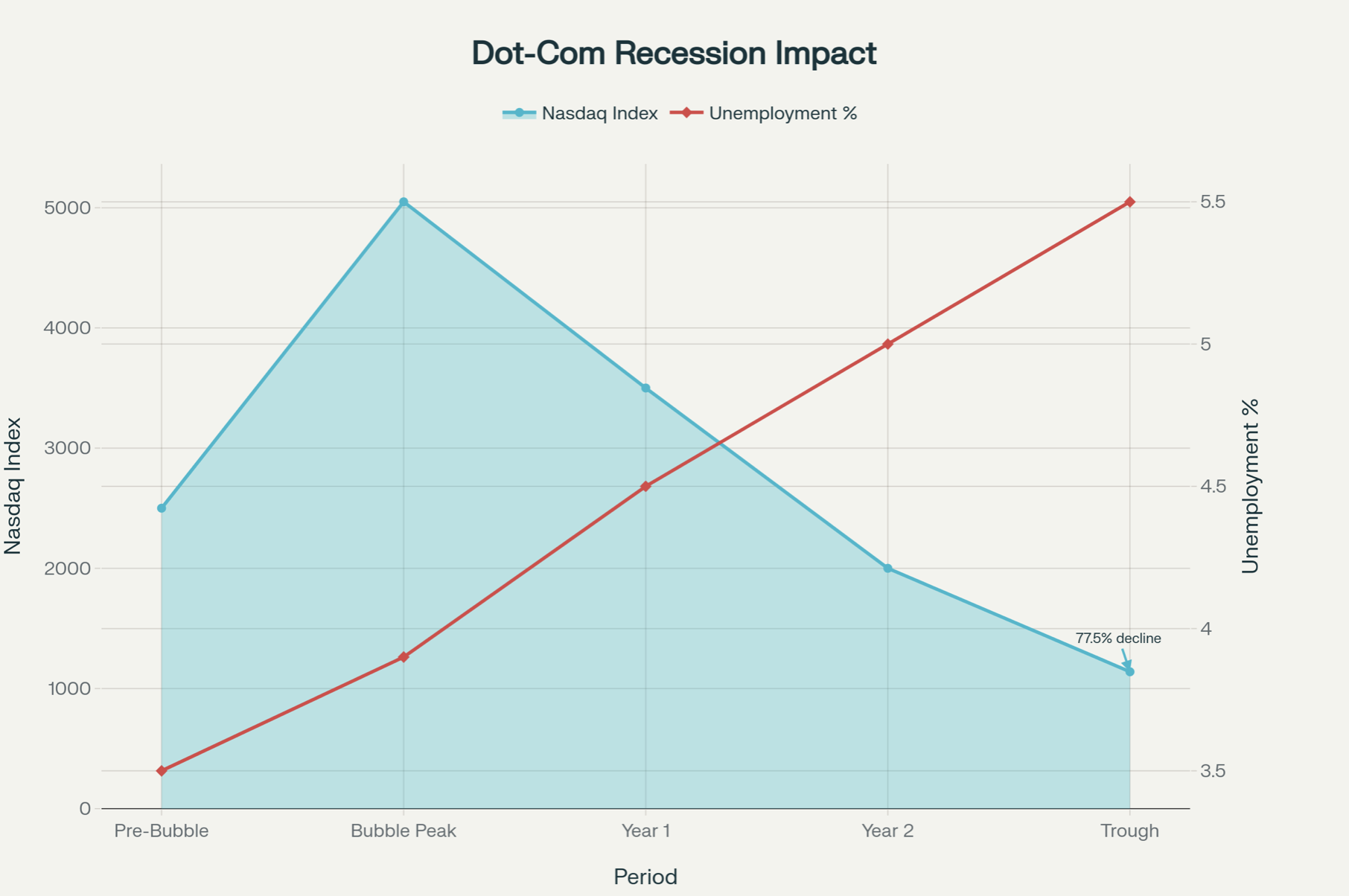

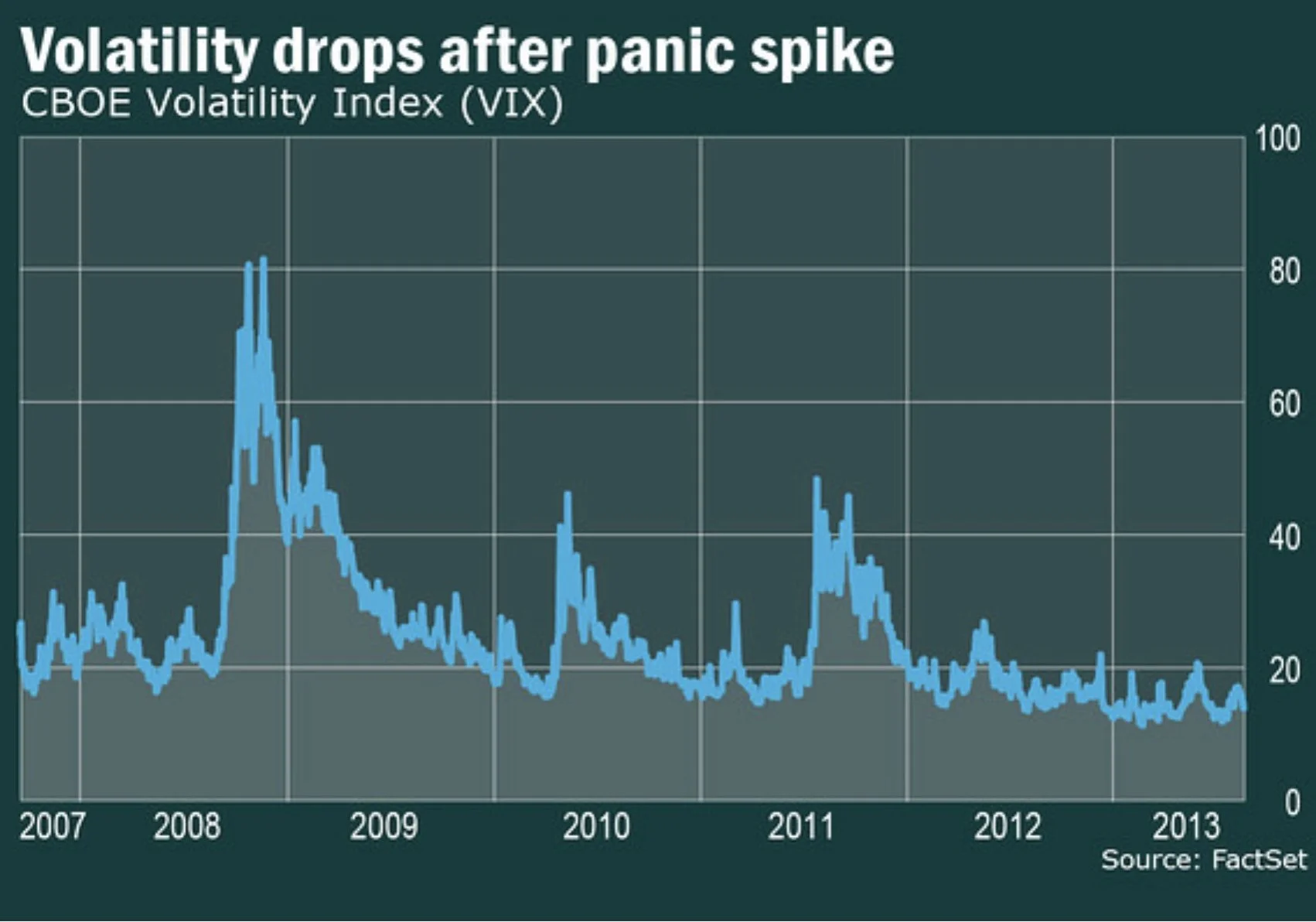

The 2001 dot-com recession provides a cautionary historical reference point, though current circumstances differ in material respects. After peaking at 5,048.62 on March 10, 2000, the Nasdaq Composite crashed 77.5% to 1,139 by October 2002, a loss that erased virtually all gains accumulated during the prior seven years. Real GDP contracted by 0.3% in Q3 2001, unemployment rose from 3.9% to 5.5%, and nonfarm payroll employment fell by 762,000; manufacturing alone shed about 1 million jobs, with gains in other sectors partly offsetting those losses. The recovery that followed is often described as a "jobless recovery" because employment growth lagged the economic recovery by years: the economy took 46 months to regain its pre-recession employment peak, compared with 21 months on average for post-WWII recessions.[17][18][19][20]

Dot-Com Bust (2000-2002): 77.5% Market Crash and Rising Unemployment

The key difference between that episode and the current environment is that today's leading technology companies remain substantially profitable and have diversified business models that extend well beyond speculative capacity buildouts. Nvidia, Microsoft, and Alphabet generate genuine operating cash flows, unlike many dot-com era ventures that operated at structural losses. However, this strength is precisely why the current bubble carries additional systemic risk: these companies are so large that they are no longer peripheral to the broader economy but central to financial stability, through their pervasive presence in asset portfolios, their commanding influence over power and manufacturing capacity, and their critical role in reshaping capital allocation across the entire corporate sector.

Broader Systemic Vulnerabilities

Beyond the direct consumption and investment channels, several emerging systemic vulnerabilities threaten to amplify an AI bubble correction into broader financial instability. The first involves financial system leverage disguised through complex financial structures. Banks and private credit funds now hold substantial exposure to AI infrastructure projects, either directly through project finance arrangements or indirectly through investments in firms like CoreWeave that specialize in data center ownership. The Bank of England specifically warned that if forecasted debt-financed AI infrastructure growth materializes, the potential financial stability consequences become pronounced, particularly given leverage and liquidity mismatch that could amplify shocks.[21][12]

The second vulnerability involves commodity markets, particularly electricity and copper. Data centers require massive power commitments, and every megawatt of AI data center capacity requires an estimated 20-40 tonnes of copper for wiring and infrastructure. Should AI capex suddenly moderate, demand destruction in power and materials markets could trigger commodity price declines that create margin calls in commodity derivatives markets and potentially cascade through systemically important financial institutions; the 2022 nickel squeeze on the London Metal Exchange, where margin stress forced the exchange to suspend trading, showed how quickly such pressure can spread. Electricity prices have already risen sharply in data center-intensive regions; wholesale electricity costs in Virginia have risen as much as 267% relative to five years earlier due to data center concentration.[22][21]

The third vulnerability involves the Federal Reserve's limited policy flexibility. With inflation remaining "somewhat elevated" according to the Fed's October 2025 statement, policymakers may find it difficult to cut rates aggressively in response to an AI asset price correction, limiting their traditional tool for supporting asset prices and credit conditions during crises. The Fed has explicitly noted that "uncertainty about the economic outlook remains elevated" and "downside risks to employment rose in recent months," suggesting policymakers are already concerned about economic momentum. In a severe correction scenario where unemployment rises meaningfully, the Fed would face the challenging task of cutting rates while inflation remains above target.[23]

The Valuation Paradox and Measurement Challenges

A nuanced observation emerges from comparing contemporary AI bubble metrics to the dot-com precedent: while some aggregate valuation measures suggest moderate valuations, others reveal unprecedented excess. The forward P/E ratio on the Nasdaq sits at 26.4x in late 2025, substantially below the 60.1x reached in March 2000, suggesting that earnings growth rather than pure speculation justifies much of current valuations. Similarly, the median price-to-sales ratio for technology companies has declined from 32.44x in March 2000 to approximately 8.5x in 2025, and median price-to-book ratios have collapsed from 13.85x to 3.5x.[8][24]

Yet this apparent moderation in traditional metrics masks a more concerning reality reflected in alternative valuation measures. The ratio of total US equity market value to GDP has reached 360%, the highest level ever recorded, compared to 140% at the dot-com peak and 80% in pre-2000 conditions. The concentration of market gains in just seven stocks has also increased: these companies now represent 31.5% of the S&P 500, compared to 25-30% of the Nasdaq in the late 1990s. Most troublingly, the return on investment for massive AI capex remains unproven and disputed, with 95% of enterprise implementations showing zero measurable financial return despite the scale of infrastructure spending.[21][7][5][8]

This measurement challenge extends to productivity estimates. While some studies suggest generative AI can boost productivity by 15-50% in professional services and healthcare, the distinction between efficiency gains and actual economic value creation remains contested. A marketing team that cuts content creation time from hours to minutes experiences a genuine efficiency improvement, but this does not automatically translate into revenue growth or cost savings: competitors benefit from the same tools, and customers do not necessarily pay more for identical services delivered faster. The failure of most enterprises to convert AI tool access into measurable ROI after six months suggests that the productivity gains may be smaller, later-arriving, or harder to monetize than the scale of infrastructure investment implies.[25][26][5]

Policy and Market Implications

Policymakers face the difficult challenge of distinguishing productive AI innovation from speculative excess without the benefit of historical precedent or clear metrics. The Federal Reserve has explicitly acknowledged that "uncertainty about the economic outlook remains elevated," and policymakers are "attentive to the risks to both sides of its dual mandate." Yet the tools available to address a tech-driven bubble correction are limited by inflation constraints: cutting rates aggressively to support asset prices would risk re-igniting inflation expectations precisely when underlying labor market weakness would otherwise justify easing.[23]

Congress faces parallel challenges regarding tax and regulatory policy. The recent passage of full upfront expensing for capital investments has created $55 billion in combined tax savings for major technology firms in 2025 alone, effectively subsidizing the AI capex boom through forgone revenue. Policymakers must assess whether this represents appropriate industrial policy supporting crucial technology infrastructure or simply amplifies speculative excess during a bubble phase.[12]

The most likely policy response path involves policymakers allowing an AI bubble correction to occur gradually rather than intervening aggressively, hoping that incremental multiple compression combined with genuine earnings growth preserves employment levels throughout the process. However, if a correction occurs rapidly, triggered by disappointing productivity data, debt rating downgrades cascading through the financial system, or a geopolitical shock that disrupts supply chains for semiconductors, the Fed's policy response capacity may prove insufficient to prevent broader economic deterioration.

Conclusion: A Fragile Foundation

The US economy's remarkable resilience in 2025 rests on an extremely narrow foundation: the willingness of a handful of mega-cap technology firms to invest $370 billion annually in AI infrastructure, financed increasingly through debt rather than internal cash flows, in pursuit of productivity gains that have not yet materialized at commercial scale. This buildout has accounted for roughly 92% of US GDP growth in the first half of 2025, driven a historic concentration of market gains into a narrow group of stocks, and created systemic vulnerabilities through financial leverage, commodity exposure, and policy constraints that limit the Fed's response capacity.

While AI represents a genuinely transformative technology with long-term economic potential, the current investment boom appears to embody a classic bubble structure: accelerating capital flows into an emerging technology domain, concentration of gains in a few dominant firms, circular financing patterns that substitute capital recycling for fundamental demand validation, leverage expansion to fund growth expectations, and a disconnect between valuation levels and demonstrated economic returns. The vulnerability is not that AI itself is overvalued as a technology, but that the current financial structure (concentrated market valuations, debt-financed capex, unproven productivity returns, and narrow GDP growth drivers) creates multiple channels through which a correction in AI asset values would transmit directly into real economic contraction.

Should technology stock valuations compress by 30% as part of a normal market correction, the wealth destruction would immediately reduce high-income consumer spending by an estimated $210 billion annually through the empirical wealth effect. Corporate capex freezes would follow as projects financed on the basis of projected AI productivity returns face revised financial cases. Manufacturing employment would contract as IT equipment orders decelerate. Technology sector debt would face refinancing pressure. Utilities would confront stranded investments in power infrastructure constructed specifically to serve data centers. The cascade would likely result in a recession with significant unemployment consequences, following the precedent of the 2001 dot-com recession, after which payrolls took 46 months to regain their prior peak.

The most prudent policy path involves managing a gradual correction in AI-related valuations while supporting underlying technology innovation and placing greater focus on domestic market dynamics, building a stronger domestic economic foundation that can deliver real productivity gains. Subsidies for productive infrastructure investment should continue, but policymakers should remain vigilant in distinguishing genuine productivity improvements from financial engineering and speculative excess. For investors, the current environment warrants substantial skepticism toward technology valuations despite genuine earnings strength, particularly for firms whose growth depends on continued acceleration of capex and debt issuance in pursuit of productivity gains that remain largely hypothetical at scale.

The Quiet Bank Run: How Stablecoins Could Pull $1 Trillion From Emerging‑Market Banks

By Yipeng (Dylan) Liu — The Beryl Consulting Group

Executive Summary

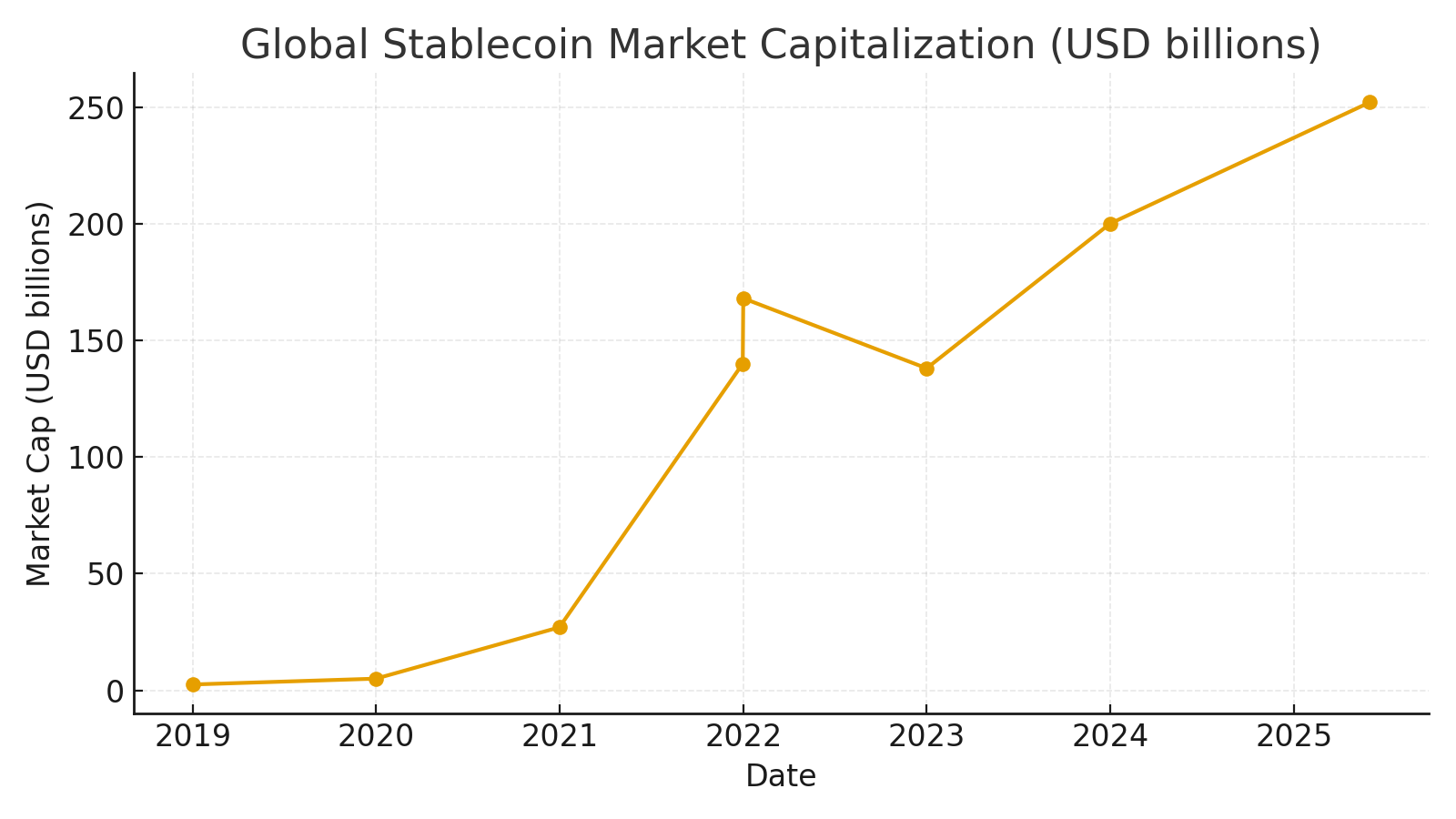

At last week’s digital assets symposium attended by Beryl analysts, speakers estimated that USD-pegged stablecoins could drain roughly $1T in deposits from emerging-market (EM) banks over the next three years. The driver is not a crisis or sanctions. It’s a funding leak: local deposits migrate to tokenized dollars whose reserves sit in U.S. T-bills. In markets where the utility function is “return of capital > return on capital,” and where currencies crumble every few years, a dollar token on your phone feels safer than a balance in your local bank. The macro story is a gradual rerouting of savings away from local banking balance sheets and toward short-duration U.S. T-bills, changing who finances whom.

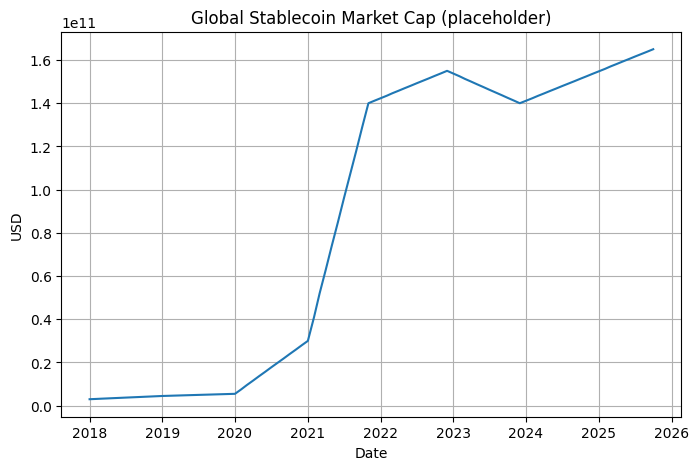

Market Structure and Scaling

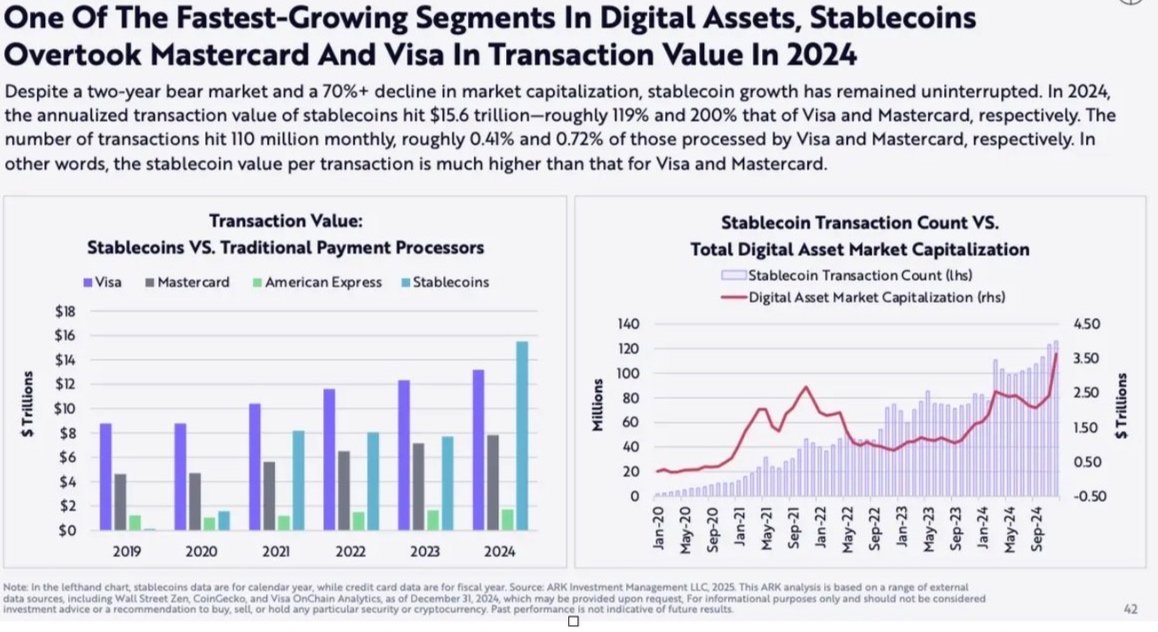

Global stablecoin capitalization has grown from ~USD 27B at end-2020 to ~USD 250B+ as of mid-2025, rebounding from a 2022 deleveraging dip along the way. That trajectory points to payments and treasury-like use cases, not just speculation.
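The average growth rate implied by that trajectory can be computed directly. This sketch assumes a 4.5-year span between the two approximate endpoints in the text and smooths over the 2022 dip, so the result is an average rate, not a description of the path.

```python
# Implied compound annual growth rate of stablecoin capitalization.
# Endpoints are the text's approximations; the 4.5-year span is an assumption
# about exact dates, and the 2022 dip is smoothed away by construction.

def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1.0 / years) - 1.0


rate = cagr(start=27e9, end=250e9, years=4.5)
print(f"Implied CAGR: {rate:.0%} per year")
```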

What stablecoins are today. Functionally, they are borderless USD accounts with API access and 24/7 settlement. They are not a DeFi novelty anymore; they are a payments and savings layer that clears at internet speed.

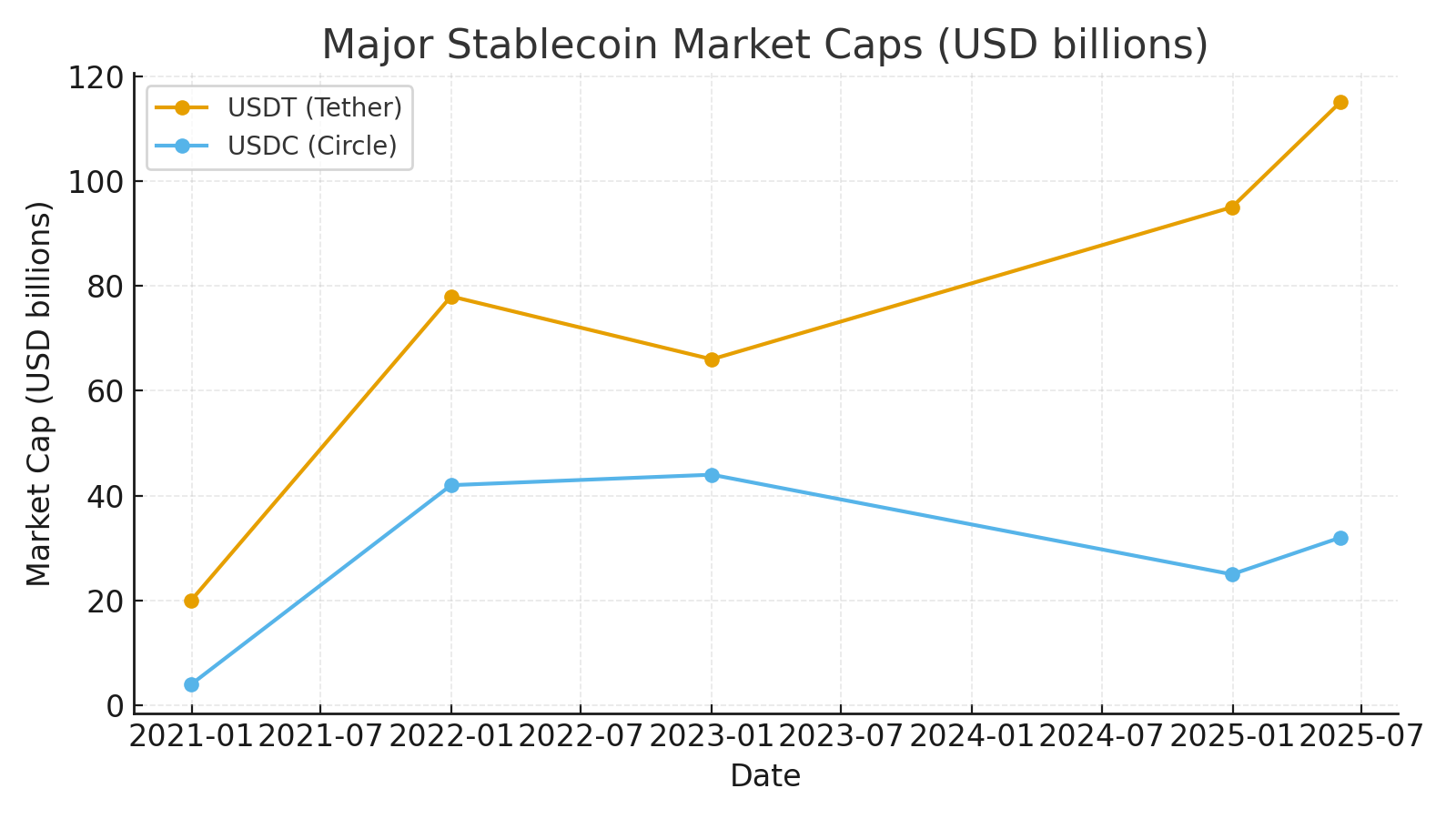

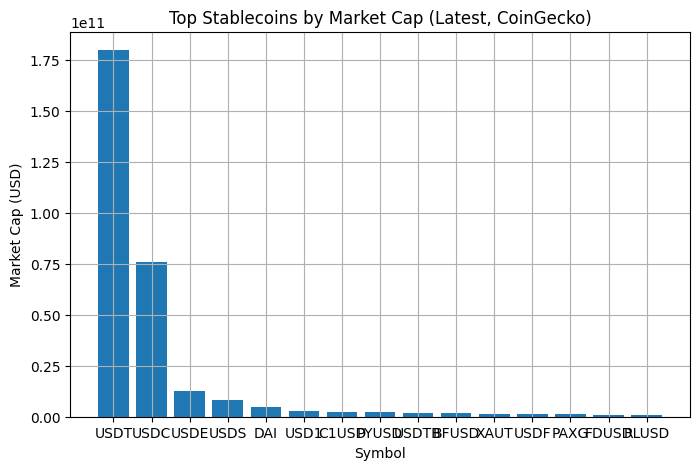

Who the issuers are. Supply is concentrated (USDT, USDC, a few smaller regulated players). Reserve disclosures show a high share of T-bills, cash, and overnight facilities; credit and duration risk are intentionally minimal. The business model is “float into T-bills.”

How big the base is. Global capitalization has grown from low tens of billions pre-2021 to hundreds of billions now. The key insight is not the headline cap; it’s velocity and geography: a large share of on-chain value in lower-income markets clears in stablecoins, not native tokens, because dollar utility dominates speculation.

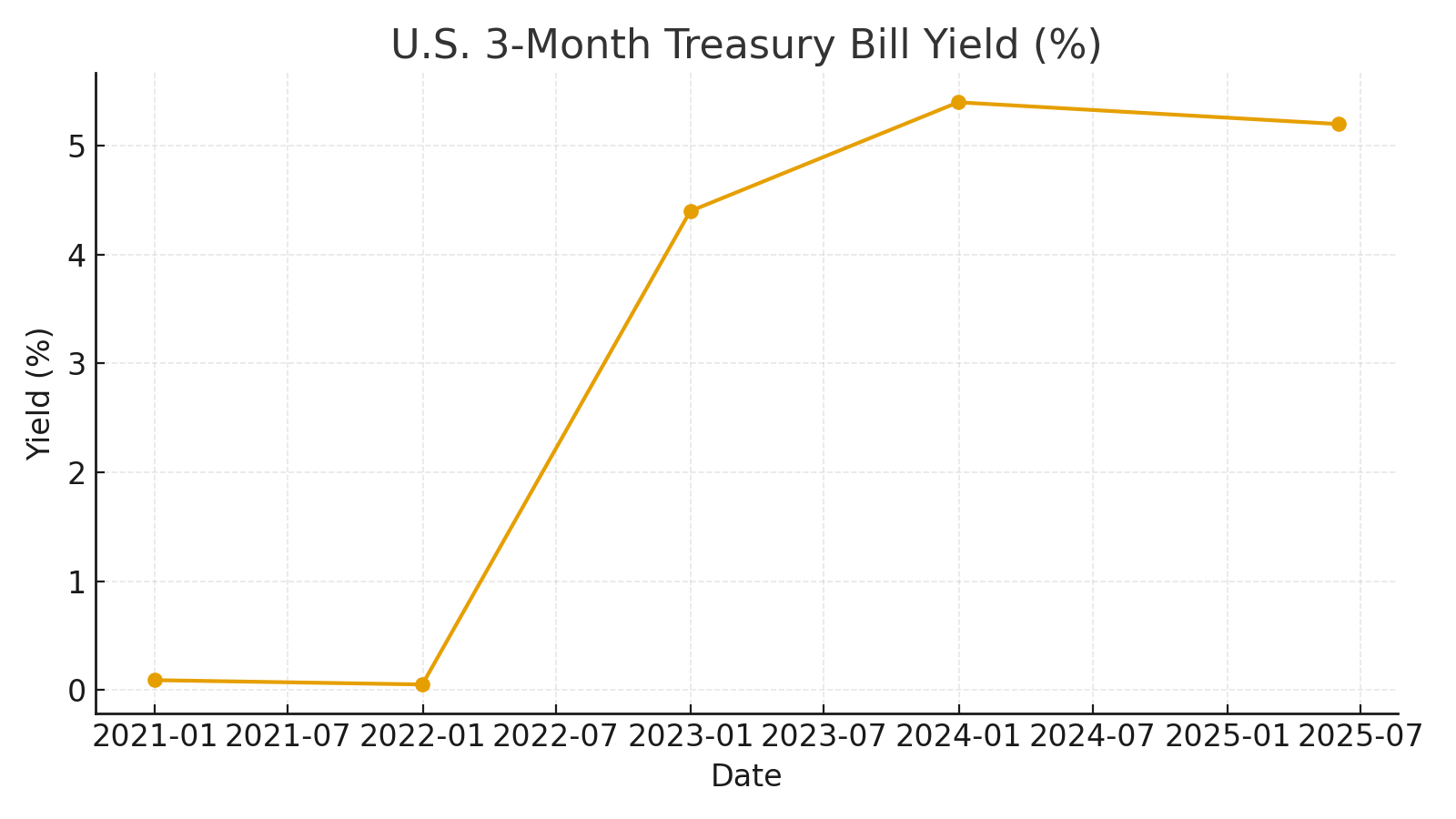

Reserve Transmission

Leading issuers hold the bulk of reserves in U.S. Treasury bills and cash. When EM deposits migrate into stablecoins, funding effectively re‑routes from local bank balance sheets to the U.S. Treasury via issuer reserve portfolios—tightening domestic credit conditions over time.

How EM deposits become U.S. T-bills

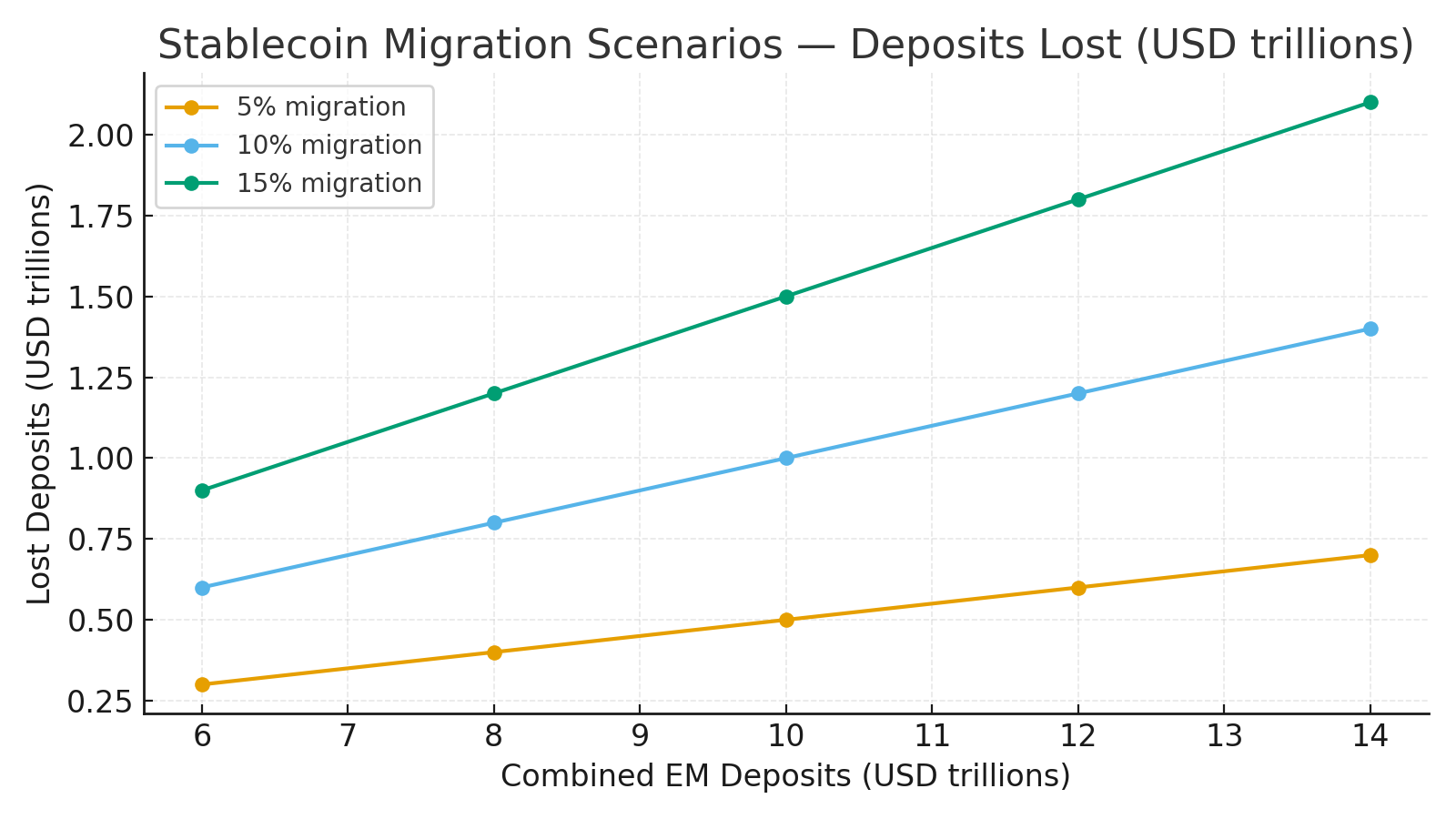

Let D denote the deposit base across a set of EM banking systems. If a fraction p of D migrates to stablecoins, the mechanical outflow is:

Lost deposits: L = p × D

New U.S. T-bill demand: ~L × r, where r is the reserve share invested in T-bills (often very high).

Implication. A 10% migration from a $10T combined EM deposit base implies $1T leaving local banks and nearly that amount reappearing as demand for U.S. bills. Funding duration shortens and moves abroad; EM banks face higher marginal funding costs and tighter credit creation, especially for SMEs.
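The two-line model above translates directly into code. In this sketch the $10T base and 10% migration come from the text, while the reserve share `r = 0.8` is a hypothetical placeholder (actual issuer allocations vary and change over time), and `deposit_migration` is an illustrative helper name.

```python
# Minimal sketch of the deposit-migration arithmetic: L = p * D lost deposits,
# and roughly L * r of new T-bill demand. r = 0.8 is a hypothetical placeholder.

def deposit_migration(deposit_base: float, p: float, r: float) -> tuple[float, float]:
    """Return (deposits lost to stablecoins, implied new T-bill demand)."""
    if not (0.0 <= p <= 1.0 and 0.0 <= r <= 1.0):
        raise ValueError("p and r must be fractions between 0 and 1")
    lost = p * deposit_base
    return lost, lost * r


lost, tbill_demand = deposit_migration(deposit_base=10e12, p=0.10, r=0.80)
print(f"Deposits leaving local banks: ${lost / 1e12:.2f}T")
print(f"New U.S. T-bill demand:       ${tbill_demand / 1e12:.2f}T")
```

Keeping p and r as explicit fractions avoids the common slip of multiplying by a percentage (10 instead of 0.10).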

Asset Liability Management (ALM) angle. Banks with structural long-asset/short-funding profiles (mortgage/SME portfolios) are most exposed. Liquidity coverage ratios may look fine until retail FX hedging behavior becomes sticky through wallets.

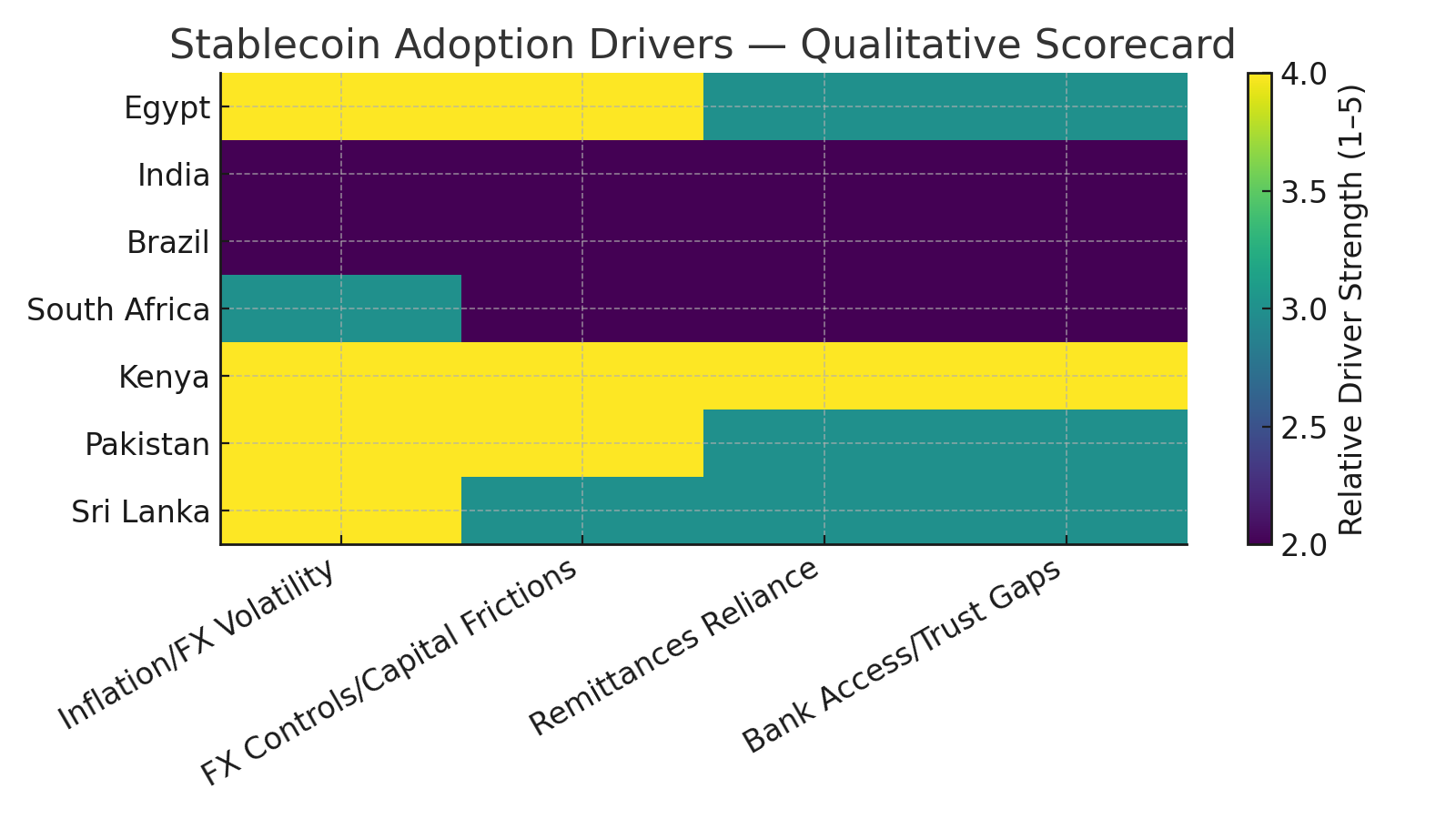

Adoption Drivers

Lower‑income corridors where inflation risk, Foreign Exchange (FX) frictions, and remittance flows are material show the highest stablecoin share of on‑chain activity. Households and SMEs prioritize return of capital over return on capital.

The user problem. In Cairo, Karachi, or Lagos, the first objective function is capital preservation. USD volatility is lower than local FX; convertibility risk sits with the bank, not the wallet.

The product fit. Stablecoins compress three frictions:

FX risk → peg to USD.

Access/convertibility → 24/7 rails, no bank hours.

Compliance friction → Know Your Customer (KYC) checks on on-/off-ramps, but simpler than cross-border wire regimes.

Remittance gravity. Family remittances + contractor income (freelance services, e-commerce) naturally dollarize on-chain. Once dollars arrive as tokens, they tend to stay in token unless there’s a compelling local alternative.

User Experience (UX) lock-in. Wallets now mimic banking apps: balances, transfer memos, QR pay, card on-ramps. Once households think in USD for savings, reversing that habit requires credible inflation-control and positive real deposit rates—a high bar.

Scenario Lens

If the combined deposit base across a representative EM set is $6–14T, then 5–15% migration to stablecoins implies $0.3–2.1T of deposits lost to local banks. The practical consequence is higher loan spreads and rationing for SMEs most reliant on bank intermediation.
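The scenario range can be laid out as a small grid. This sketch takes the $6-14T deposit bases and 5-15% migration shares from the text and adds a hypothetical $10T / 10% midpoint for illustration; it shows that the $0.3-2.1T span is simply the product of the range endpoints.

```python
# Scenario grid for the text's ranges: a $6-14T combined EM deposit base and
# 5-15% migration. The $10T / 10% midpoint is a hypothetical addition.
from itertools import product

bases = [6e12, 10e12, 14e12]       # combined deposit base, USD
migration = [0.05, 0.10, 0.15]     # share of deposits moving to stablecoins

outflows = {(d, p): d * p for d, p in product(bases, migration)}
for (d, p), lost in outflows.items():
    print(f"D = ${d / 1e12:>4.0f}T, p = {p:>4.0%}: ${lost / 1e12:.2f}T lost")

print(f"Range: ${min(outflows.values()) / 1e12:.1f}T to ${max(outflows.values()) / 1e12:.1f}T")
```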

Portfolio Angle

Treat stablecoins as operational cash and a settlement layer. For excess‑return math, continue to use 3‑month U.S. T‑bills as the risk‑free proxy. Position sizing and custody must reflect the tail risk of regulatory fragmentation affecting convertibility and liquidity.

Notes: Figures compile representative public point estimates and stylized scenarios; see text for interpretation and limitations.

The $1 Trillion Leak: How Stablecoins Threaten Emerging Market Banks

By Xinyi Ruan — The Beryl Consulting Group

A trillion dollars does not need a crisis to disappear; it only needs a stablecoin.

At last week’s Digital Assets conference in New York, strategists warned that up to one trillion dollars in deposits could quietly exit emerging market banks within the next three years. The potential outflow would not come from sanctions, defaults, or liquidity shocks, but from the accelerating use of stablecoins.

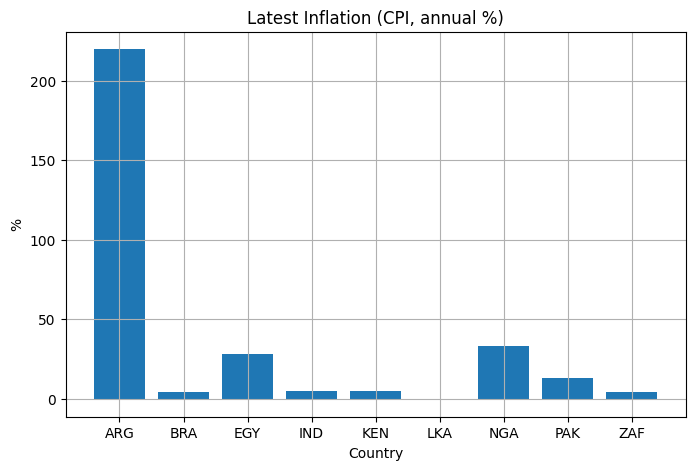

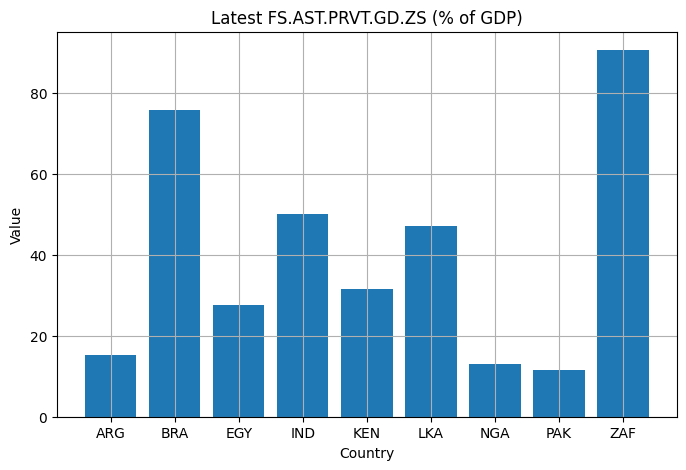

In countries such as Egypt, India, Brazil, South Africa, Kenya, Pakistan, and Sri Lanka, households are finding that a digital dollar on a smartphone feels safer than a balance in a domestic bank account. What began as a niche experiment within cryptocurrency markets has evolved into a macroeconomic phenomenon: a silent and sustained digital bank run.

Nearly 99 percent of all stablecoins are pegged to the United States dollar, and more than 70 percent of that market is dominated by two tokens: Tether (USDT), issued by Tether, and USD Coin (USDC), issued by Circle. Together they represent a combined market capitalization of approximately 165 billion dollars as of October 2025, according to CoinMarketCap. In many developing economies, these digital tokens function as borderless dollar accounts with minimal paperwork, no capital controls, and no exposure to local-currency inflation. A small merchant in Lagos or a freelancer in Karachi can store and transfer digital dollars instantly, at any hour, without the friction of local banking systems.

In markets where domestic currencies have lost more than half their value in the past decade, the attraction is clear. For savers who have lived through repeated devaluations, the question is not how much they can earn, but how much they can keep.

Trust, rather than yield, has become the rarest asset. The International Monetary Fund’s 2025 forecast places annual inflation above 30 percent in Egypt, nearly 29 percent in Nigeria, and around 23 percent in Pakistan. According to the World Bank, emerging market bank deposits currently total about 9.5 trillion dollars, a massive pool of liquidity that is increasingly vulnerable to digital flight. If even ten percent of these deposits were converted into stablecoins, the resulting reallocation would approach one trillion dollars (about 950 billion), roughly equivalent to erasing the combined deposit base of several mid-sized banking systems.

Evidence of this migration is already visible. Chainalysis reports that stablecoin transaction volumes in emerging markets have increased by more than 230 percent since 2021, a rate far exceeding that of developed economies. The so-called “crypto winter” that dampened enthusiasm in advanced markets never truly reached the Global South. For millions of savers, stablecoins are not instruments of speculation; they are instruments of self-preservation.

The danger lies not in an immediate collapse of the banking system, but in a gradual erosion of liquidity and monetary sovereignty. As deposits leak into blockchain-based systems, local banks lose the funding base required to sustain lending. With less credit creation, domestic investment slows and central banks lose influence over monetary conditions. The IMF has described this process as “digital dollarization,” warning that it threatens to weaken the transmission of monetary policy. Each stablecoin wallet created in the developing world can be interpreted as a quiet vote of no confidence in local institutions.

If the 2008 financial crisis represented a sudden collapse of confidence, this moment represents a slow and steady decline in relevance. Banks may not implode overnight, but they are steadily receding from the center of global finance.

This dynamic also carries geopolitical implications. Although stablecoins operate outside traditional regulatory channels, their widespread use effectively extends the reach of the U.S. dollar. The American government does not issue these tokens, yet their dollar peg reinforces the currency’s dominance in global trade and savings. With the Trump administration’s new crypto-friendly policy framework accelerating digital dollar adoption, stablecoins are becoming part of a decentralized yet distinctly dollar-denominated financial infrastructure. In effect, they export American monetary influence to every smartphone wallet from Cairo to Bogotá, embedding soft power in code.

In a world where an algorithm can safeguard purchasing power more effectively than a national currency, the definition of a safe asset is shifting. This is not the end of banking, but many analysts argue that it may mark the end of banking as the default institution of trust. Stablecoins might be achieving what many local banks could not: protecting savings, preserving liquidity, and restoring confidence.

The next phase of stablecoin growth will likely come not from traders in New York or London, but from small businesses in Nairobi, remittance workers in Dhaka, and families in Buenos Aires seeking stability beyond their borders. What appears to be a technological trend is in reality a structural migration of capital.

Governments are beginning to react. China’s digital yuan, Nigeria’s eNaira, and India’s digital rupee pilots are all designed to defend domestic monetary sovereignty against dollar-pegged digital tokens. Yet these efforts may not fully reverse the tide. Money is increasingly defined by networks rather than jurisdictions.

It appears that the trillion dollars that could leave emerging market banks will re-emerge on-chain, circulating globally, denominated in dollars but governed by code. The next global reserve currency will not be printed. It will be tokenized.

The $7 Trillion Compute Buildout: What's Driving the Next Infrastructure Wave

Part II

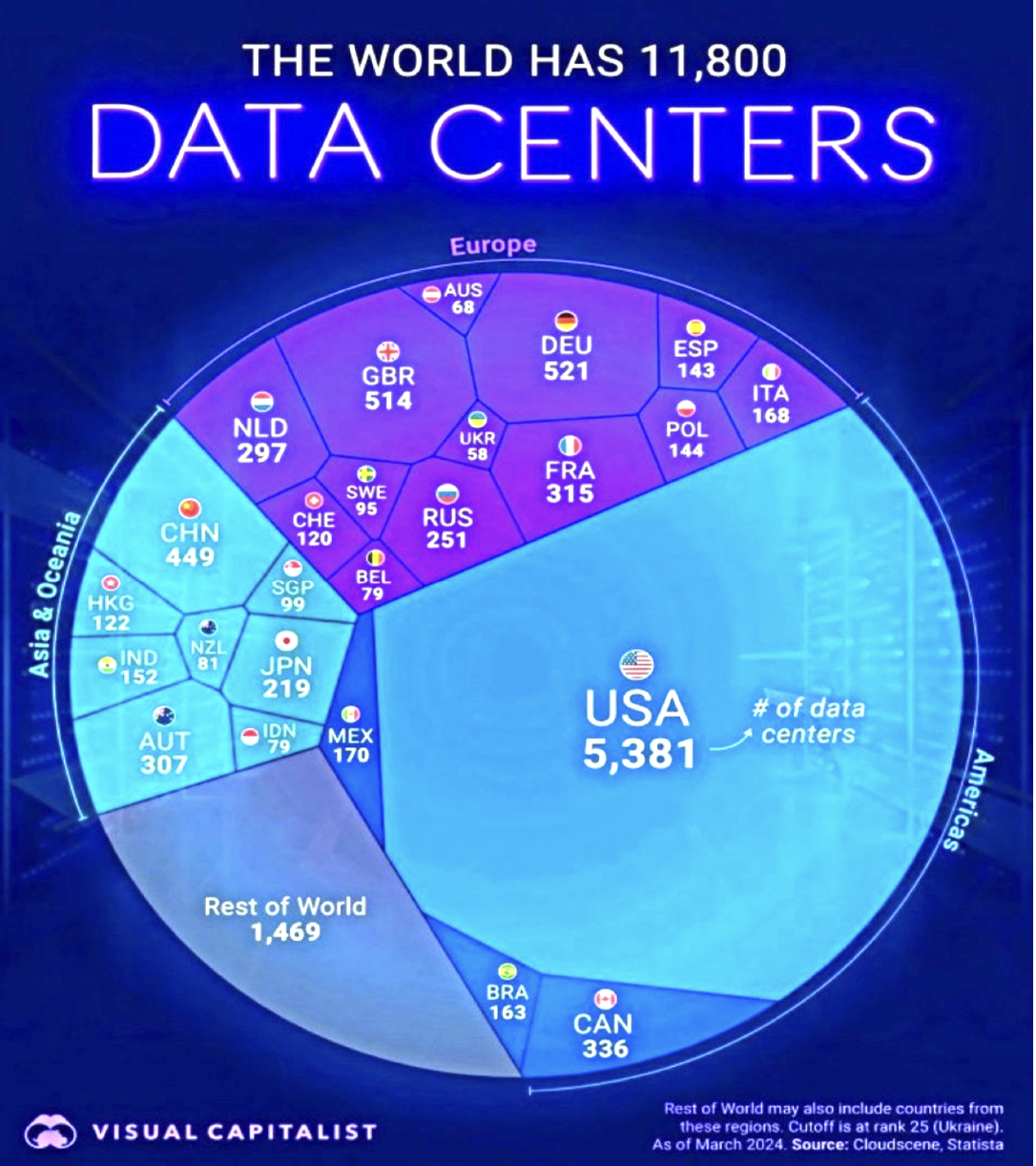

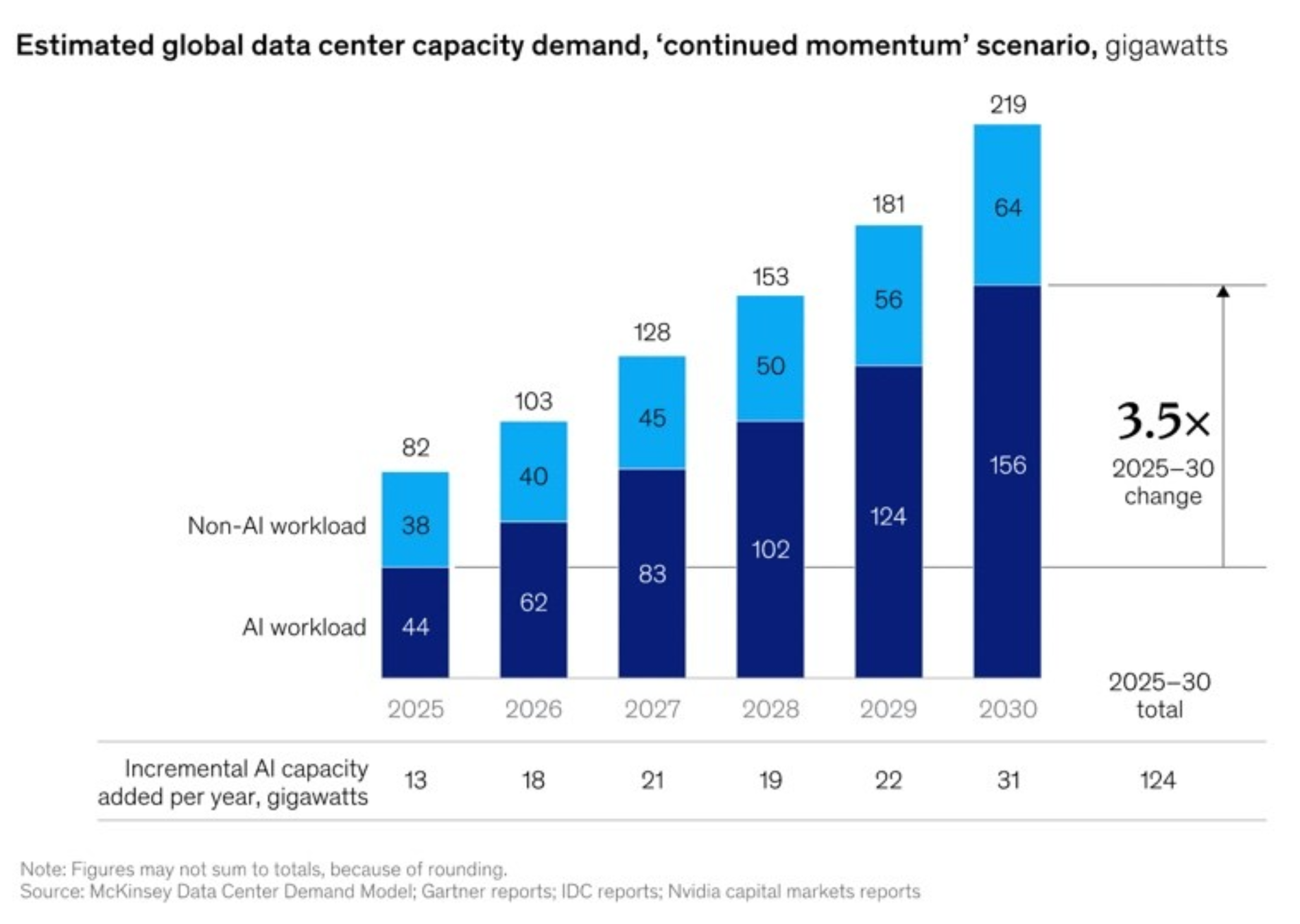

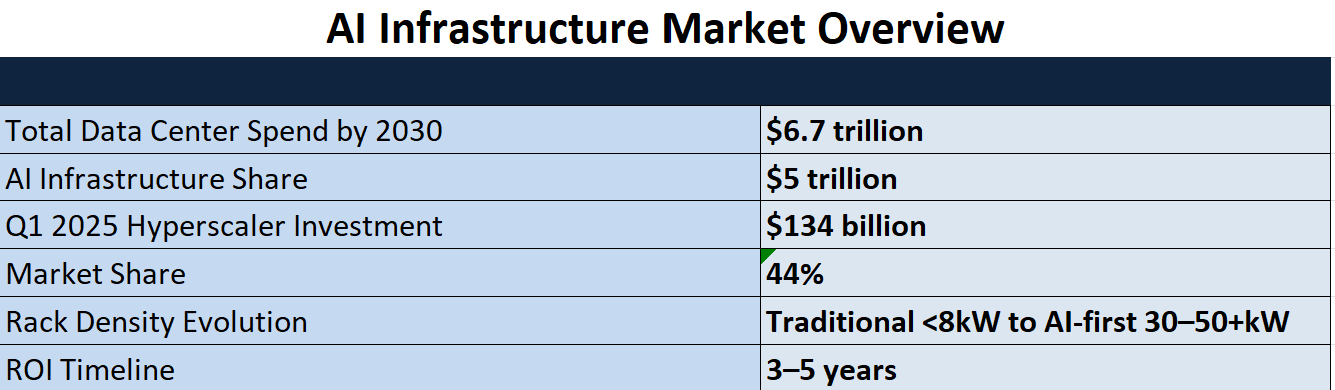

Previously, we discussed how the global artificial intelligence revolution is driving one of the largest infrastructure investments in human history. By 2030, total spending on data centers is projected to reach $6.7 trillion, with nearly $5 trillion directed toward AI-related infrastructure: facilities designed for compute-heavy workloads such as real-time analytics, large-scale AI training, and high-resolution video. Meta CEO Mark Zuckerberg said last month that the company would spend hundreds of billions of dollars to build several massive AI data centers for superintelligence, intensifying his pursuit of a technology he has already chased through a talent war for top engineers. This unprecedented expansion represents a fundamental transformation of digital infrastructure, reshaping power grids, cooling systems, supply chains, and regional economies. The scale of this buildout reflects not only technological advancement, but also a strategic imperative for nations and companies to secure their position in the emerging AI-driven economy. Building on this foundation, the following section turns to specific regional developments, highlighting America’s AI data center super-build and the Middle East’s emergence as a new hub for AI compute power.

Risk Factors and Mitigation Strategies

AI infrastructure investments face several significant risks that must be carefully managed. Technology evolution risk is substantial, as rapid advances in AI algorithms, chip architectures, and software frameworks can quickly render existing infrastructure obsolete. Supply chain risks include component shortages, geopolitical tensions affecting semiconductor availability, and potential price volatility for critical components[58].

Demand uncertainty represents another risk factor, as AI adoption rates and application requirements remain difficult to predict accurately. Customer concentration risk, exemplified by CoreWeave's dependence on Microsoft for 62% of revenue, can create significant vulnerability to individual customer decisions[132].

Environmental and Efficiency Benefits & Innovative Cooling Solutions

The transition to AI-first infrastructure represents a fundamental shift in data center design philosophy. Traditional data centers were optimized for general computing workloads with relatively predictable power consumption patterns. AI workloads, however, demand sustained high-performance computing with minimal latency tolerance, driving rack densities to previously unimaginable levels.

AI-powered facilities require far more electricity to run massive GPU clusters, train deep learning models, and deliver real-time inference. According to McKinsey research, data centers supporting AI could require up to 3x more power per square foot than traditional facilities, driven by the shift toward specialized hardware like GPUs and TPUs[54]. A single DGX H100 system, which houses eight H100 GPUs, is expected to draw approximately 10,200 W, whereas the processors in traditional servers typically consume 150-200 W per chip[60].

This power density revolution is pushing cooling systems to their limits. AI data centers can generate extreme heat that standard air cooling cannot handle effectively. Air-based cooling alone can account for up to 40% of a typical data center's electricity consumption. Liquid cooling systems, by contrast, can support dense racks on the order of 50-100 kW or more, with potential cooling-energy savings of up to 90% compared to air-based methods[57].

High AI processing demand has also accelerated innovation in advanced cooling technologies, which deliver substantial environmental benefits alongside performance improvements. Microsoft's life cycle assessment found that advanced cooling methods, including cold plates and immersion cooling, can reduce greenhouse gas emissions by 15-21%, energy demand by 15-20%, and blue water consumption by 31-52% in data centers[102].

The power usage effectiveness (PUE) improvements from liquid cooling are substantial. While traditional air-cooled data centers typically achieve PUEs of 1.4-1.6, liquid-cooled facilities can reach PUEs below 1.2, representing a 15-25% reduction in total facility energy consumption. Some two-phase immersion cooling systems can achieve PUEs as low as 1.02-1.05, approaching the theoretical minimum PUE of 1.0[115].
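PUE is defined as total facility energy divided by the energy delivered to IT equipment, so the savings quoted above follow directly from the ratio. The sketch below holds the IT load fixed at a hypothetical 100,000 MWh per year and compares total facility energy at different PUE values. The helper names are illustrative.

```python
# PUE = total facility energy / IT equipment energy.
# At a fixed IT load, total facility energy scales linearly with PUE, so the
# fractional saving from moving pue_old -> pue_new is 1 - pue_new / pue_old.


def facility_energy(it_energy: float, pue: float) -> float:
    """Total facility energy for a given IT energy and PUE (same units)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy * pue


def pue_saving(pue_old: float, pue_new: float) -> float:
    """Fractional reduction in total facility energy at constant IT load."""
    return 1.0 - pue_new / pue_old


IT_LOAD_MWH = 100_000  # hypothetical annual IT energy, MWh

for old in (1.4, 1.6):
    saved = facility_energy(IT_LOAD_MWH, old) - facility_energy(IT_LOAD_MWH, 1.2)
    print(f"PUE {old} -> 1.2: saves {saved:,.0f} MWh ({pue_saving(old, 1.2):.1%})")
```

Moving from 1.4 to 1.2 saves about 14%, and moving from 1.6 to 1.2 saves 25%. Both results are roughly in line with the range cited above. The sketch keeps IT energy constant, which is a simplification.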

Water usage reduction is particularly important as AI data centers scale. Traditional air-based cooling with evaporative systems can require over 50 million gallons of water annually for a hyperscale facility. Closed-loop liquid cooling systems can reduce this consumption by 80-90%, while immersion cooling can eliminate water usage entirely in some configurations[57].

Supply Chain Constraints and Manufacturing Bottlenecks

The AI infrastructure expansion has revealed significant vulnerabilities in global supply chains for critical components. Three components are particularly constrained: high-bandwidth memory (HBM), high-performance GPUs, and advanced solid-state drives (SSDs). The convergence of surging AI demand, limited semiconductor capacity, and geopolitical tensions is creating unprecedented supply pressures[55].

NVIDIA's H100 and AMD's MI300 series GPUs experienced near-immediate sellouts across all production cycles in 2024. HBM3 memory, essential for AI accelerators, faces the most severe constraints with SK Hynix, Samsung, and Micron operating at full capacity while reporting six- to twelve-month lead times. This bottleneck is particularly problematic because HBM requirements per chip have reached all-time highs, with AI workloads forecasted to grow 25-35% year-over-year through 2027[55].
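The sketch below compounds the 25-35% annual growth rates over three years to show what they imply in total. It assumes 2024 as the base year and 2027 as the end year, which the source does not state explicitly. The function name `growth_multiple` is illustrative.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Cumulative growth factor from compounding `annual_rate` for `years` years."""
    return (1.0 + annual_rate) ** years


BASE_YEAR, END_YEAR = 2024, 2027  # assumption: growth runs 2024 -> 2027
horizon = END_YEAR - BASE_YEAR

for rate in (0.25, 0.35):
    print(f"{rate:.0%} per year for {horizon} years -> "
          f"{growth_multiple(rate, horizon):.2f}x")
```

Under these assumptions, workloads would end the period at roughly two to two-and-a-half times their starting level. That outpaces memory capacity that already faces six- to twelve-month lead times.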

Advanced packaging represents another critical constraint. Capacity for chip-on-wafer-on-substrate (CoWoS) packaging, which is essential for high-performance AI chips, would need to nearly triple by 2026 if data center demand for current-generation GPUs doubled[58]. Taiwan Semiconductor Manufacturing Company (TSMC), the dominant provider of advanced packaging services, is rapidly expanding capacity but faces lead times of 18-24 months for new facilities.

Trade tensions and export controls are also significantly affecting AI infrastructure supply chains. The semiconductor industry's concentration in specific geographic regions, with Taiwan controlling 92% of advanced chip manufacturing and South Korea dominating memory production, creates substantial geopolitical risks[58].

Recent tariff discussions and technology export controls could further constrain supply availability and increase costs. Companies are responding by diversifying supply sources, signing long-term purchase agreements, and in some cases, making direct investments in manufacturing capacity. The "just-in-time" inventory strategy that dominated the past several decades is giving way to a "just-in-case" approach that is costlier but more resilient[58].

Manufacturing Capacity Expansion

The industry is responding to supply constraints with massive capacity expansion investments, building on the unprecedented U.S. growth we have covered in previous articles. Leading foundries are building four to five additional bleeding-edge fabrication facilities at a cost of $40-75 billion to meet projected demand growth[58]. These facilities require 3-5 years to become operational, however. The result is a significant gap between accelerating demand and the supply response, and that gap may also present an investment opportunity.

Memory manufacturers are also expanding production capacity, but the complexity of HBM manufacturing limits the number of suppliers that can achieve required quality and performance standards.

Geographic Expansion and Regional Development

The geographic distribution of AI data centers is increasingly driven by power availability, cooling efficiency, and regulatory environments rather than proximity to traditional technology hubs. While Northern Virginia remains dominant with its established infrastructure and connectivity, expansion is accelerating in regions with abundant renewable energy resources and favorable regulatory frameworks[56].

Texas has emerged as a major data center destination, attracting billions in hyperscaler investments due to its deregulated energy market, abundant renewable energy resources, and business-friendly policies. Houston and Austin are becoming significant hubs, with the University of Texas contributing technological expertise and talent development[56]. Similarly, Iowa's rural settings offer abundant land, lower operational costs, and access to wind energy, making it attractive for large-scale AI training facilities.

Internationally, data sovereignty requirements are driving localized infrastructure development. European markets are expanding rapidly to meet GDPR compliance needs and reduce latency for regional AI services. The Asia-Pacific region represents the fastest growth opportunity, with capacity expected to double within five years as countries like Indonesia, Malaysia, and Thailand develop their digital economies[59].

Infrastructure Readiness and Grid Integration

The massive power requirements of AI data centers—ranging from 100 MW to over 2 GW for planned hyperscale campuses—are straining regional electricity grids and requiring unprecedented coordination with utility providers. Some hyperscalers are exploring 50,000-acre data center campuses that could consume 5 GW, equivalent to powering several million homes[31].
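A rough energy calculation can check the "several million homes" comparison. The sketch below makes two simplifying assumptions. First, an average U.S. household uses about 10,500 kWh of electricity per year, roughly in line with EIA figures. Second, by default, the campus draws its full 5 GW continuously, although real campuses run below peak. The function name is illustrative.

```python
HOURS_PER_YEAR = 8_760
AVG_HOME_KWH_PER_YEAR = 10_500  # assumption: approximate U.S. household use


def homes_equivalent(campus_gw: float, load_factor: float = 1.0,
                     home_kwh_per_year: float = AVG_HOME_KWH_PER_YEAR) -> float:
    """Homes whose combined annual electricity use matches the campus's."""
    campus_kw = campus_gw * 1_000_000  # 1 GW = 1,000,000 kW
    campus_kwh = campus_kw * HOURS_PER_YEAR * load_factor  # annual energy
    return campus_kwh / home_kwh_per_year


for lf in (1.0, 0.7):  # full load vs. a hypothetical 70% load factor
    print(f"load factor {lf:.0%}: {homes_equivalent(5.0, lf) / 1e6:.1f} million homes")
```

At full load the result is a little over 4 million homes. At a 70% load factor it is roughly 2.9 million, which is still consistent with "several million."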

This scale of demand is driving innovation in power generation and distribution. Data center operators are increasingly contracting directly with renewable energy providers, sometimes financing entire wind or solar farms to ensure adequate clean power supply. Microsoft, Google, and Amazon have all announced multi-gigawatt renewable energy purchase agreements specifically to support AI infrastructure expansion[84].

Grid stability concerns are prompting collaboration between data center operators and utility companies on demand response programs and grid services. Advanced battery storage systems and load balancing technologies are being integrated into data center designs to provide grid stabilization services while maintaining operational reliability[87].

Beyond their massive infrastructure needs, data centers create significant economic multiplier effects in local communities. Construction phases generate thousands of temporary jobs for skilled trades, while operational phases provide high-paying technical positions and supporting service jobs. Google's data center investments in Iowa exemplify this impact, creating thousands of construction jobs and hundreds of permanent technical positions while partnering with local schools for STEM education and IT training programs[85].

Tax revenue generation is substantial, with data centers typically paying significant property taxes while requiring minimal public services. However, communities are increasingly evaluating the trade-offs between economic benefits and resource consumption, particularly regarding electricity grid capacity and water usage for cooling systems[82].

Regulatory Frameworks and Policy Initiatives

The U.S. federal government has implemented comprehensive policy frameworks to accelerate AI infrastructure development. The Trump Administration's Executive Order 14318 "Accelerating Federal Permitting of Data Center Infrastructure" aims to streamline federal permitting for AI-centric data centers requiring over 100 MW of power, representing a shift toward federally coordinated, accelerated deployment[83].

This executive order directs agencies to ease environmental review processes under the National Environmental Policy Act (NEPA) and streamline permitting under the Clean Air Act, Clean Water Act, and other federal regulations. The government is also providing loans, loan guarantees, grants, tax incentives, and offtake agreements to financially support "Qualifying Projects" involving data center construction[86].

The Department of Defense is investigating military installations for competitive leasing to support AI objectives, while the Departments of Commerce, Interior, and Energy are coordinating with industry on authorizing suitable sites. This comprehensive approach reflects the federal government's recognition of AI infrastructure as critical to national security and economic competitiveness[83].

States are increasingly competing to attract AI infrastructure investments through targeted incentive packages and regulatory streamlining. Texas offers substantial tax incentives and expedited permitting processes, while maintaining its deregulated energy market that allows data center operators to negotiate directly with power providers. Virginia continues to leverage its established data center ecosystem and proximity to government customers.

However, the federal executive order includes provisions to discourage "burdensome AI regulations" by threatening to withhold federal AI funding from states with restrictive policies. This approach may drive states to weaken environmental regulations specifically for the AI industry, particularly those aligned with federal environmental acts[86].

International Regulatory Coordination

Globally, governments are recognizing AI infrastructure as strategically critical and implementing supportive policies. Countries including China, India, Singapore, and Malaysia offer tax incentives and subsidies for data center development, while Japan and South Korea are investing heavily in AI research and cloud infrastructure[59].

Data sovereignty requirements are shaping international expansion strategies, with numerous jurisdictions enacting laws requiring personal data to remain within national borders. This regulatory trend accelerates demand for locally situated data centers and influences hyperscaler deployment strategies across different regions.

Sustainability Initiatives and Environmental Considerations

The environmental impact of AI infrastructure has become a central concern as data centers are projected to consume 536 terawatt-hours (TWh) globally in 2025, representing about 2% of global electricity consumption[57]. Leading hyperscalers are responding with ambitious renewable energy commitments and innovative clean power integration strategies.

Google has partnered with Kairos Power to build small modular nuclear reactors specifically to meet the rising energy demands of AI data centers with carbon-free power[84]. Microsoft has committed to being carbon negative by 2030, requiring massive investments in renewable energy and carbon capture technologies. Amazon's Climate Pledge includes plans to achieve net-zero carbon emissions by 2040, with significant focus on clean power for data center operations.

These commitments are driving innovation in renewable energy deployment. Companies like Terabase Energy are utilizing AI and robotics to accelerate construction of large-scale solar farms, enhancing efficiency and reducing deployment time for renewable energy solutions[84]. The convergence of AI infrastructure demand and renewable energy expansion is creating new opportunities for integrated clean energy development.

Advanced carbon accounting systems are becoming standard for AI infrastructure operations. Tools like CodeCarbon enable developers to measure the carbon footprint of their code, promoting awareness and encouraging optimization to reduce emissions[84]. These measurement capabilities are essential for meeting corporate sustainability commitments and regulatory requirements.
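CodeCarbon handles measurement itself through its `EmissionsTracker` class and that class's `start()` and `stop()` methods. The sketch below does not use CodeCarbon. It only illustrates the basic arithmetic such tools rely on: operational emissions equal energy consumed multiplied by the carbon intensity of the electricity grid. The job size is hypothetical (8 GPUs at about 0.7 kW each for 24 hours, counting GPU power only). The PUE of 1.2 and both grid intensities are also illustrative assumptions.

```python
def emissions_kg(energy_kwh: float, grid_g_co2e_per_kwh: float) -> float:
    """Operational emissions in kg CO2e: energy used x grid carbon intensity."""
    return energy_kwh * grid_g_co2e_per_kwh / 1_000.0  # grams -> kilograms


# Hypothetical job: 8 GPUs at ~0.7 kW each for 24 hours in a PUE 1.2 facility.
GPU_COUNT, KW_PER_GPU, HOURS, PUE = 8, 0.7, 24, 1.2
facility_kwh = GPU_COUNT * KW_PER_GPU * HOURS * PUE  # includes cooling overhead

# Illustrative grid intensities (g CO2e per kWh), not values for any real region.
for grid, intensity in {"low-carbon grid": 50, "fossil-heavy grid": 800}.items():
    print(f"{grid}: {emissions_kg(facility_kwh, intensity):.1f} kg CO2e")
```

The same job's emissions differ by a factor of 16 between the two grids. That gap is why tracking tools typically account for where code runs rather than applying a single global average.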

AI is also being deployed to optimize energy consumption within data centers. AI-driven systems can reduce HVAC energy usage significantly through predictive optimization, thermal modeling, and dynamic load balancing[84]. Machine learning algorithms are being used to optimize cooling system operations, power distribution, and workload scheduling to minimize environmental impact.

Data center operators are increasingly implementing circular economy principles to minimize resource consumption and waste generation. This includes designing facilities for component reuse, implementing heat recovery systems for district heating, and developing closed-loop water systems that minimize consumption[45].

Waste heat recovery is becoming increasingly sophisticated, with some data centers providing heat for nearby buildings, greenhouses, or industrial processes. NorthC's upcoming waste heat project in Rotterdam Zestienhoven exemplifies this approach, turning waste heat into a community resource[112].

Conclusion

The $7 trillion AI infrastructure buildout represents one of the most significant technological and economic transformations in modern history. This unprecedented expansion is reshaping not only the technology industry but also energy systems, supply chains, and regional economies worldwide. The scale and urgency of this investment reflect the strategic importance of AI infrastructure for national competitiveness, corporate success, and technological leadership.

The convergence of multiple factors—exploding AI demand, compressed ROI timelines, supply chain constraints, and geopolitical competition—is creating both tremendous opportunities and significant risks. Success in this environment requires sophisticated understanding of technology trends, market dynamics, and strategic positioning across the entire infrastructure value chain.

As we look toward 2030, the companies, regions, and nations that successfully navigate this infrastructure transformation will secure decisive advantages in the AI-driven economy. The buildout is not just about providing computational capacity; it's about enabling the next generation of human productivity, scientific discovery, and economic growth. The stakes could not be higher, and the race is well underway.

The $7 Trillion Compute Buildout: What's Driving the Next Infrastructure Wave

Part I

The global artificial intelligence revolution is driving one of the largest infrastructure investments in human history. By 2030, total spending on data centers is projected to hit $6.7 trillion, with nearly $5 trillion directed toward AI-related infrastructure—facilities built to handle compute-heavy workloads like real-time analytics, large-scale AI training, and high-resolution video[25]. This unprecedented expansion represents a fundamental transformation of digital infrastructure, reshaping everything from power grids and cooling systems to supply chains and regional economies. The scale of this buildout reflects not just technological advancement, but a strategic imperative for nations and companies to secure their position in the emerging AI-driven economy.

The Scale and Urgency of AI Infrastructure Expansion

The artificial intelligence infrastructure market is experiencing explosive growth at an unprecedented pace. According to IDC research, spending on AI infrastructure reached $47.4 billion in the first half of 2024, representing a 97% year-over-year increase[24]. This trajectory positions the market to exceed $200 billion by 2028, with Dell'Oro Group projecting that worldwide data center capital expenditures will surpass $1 trillion annually by 2029[24].
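Growth percentages in this article are easier to compare once they are converted back into base-year values. The minimal sketch below divides a current figure by (1 + growth rate). The function name is illustrative. The inputs are the IDC, hyperscaler, and CoreWeave figures quoted in this article.

```python
def prior_period(current: float, growth_rate: float) -> float:
    """Back out the prior-period value from a current value and its growth rate.

    growth_rate is a fraction: 0.97 means +97%, 7.37 means +737%.
    """
    return current / (1.0 + growth_rate)


# Figures quoted in the article (USD billions).
examples = {
    "IDC AI infrastructure spend, H1 2023": prior_period(47.4, 0.97),
    "Hyperscaler capex, 2024": prior_period(371.0, 0.44),
    "CoreWeave revenue, 2023": prior_period(1.9, 7.37),
}
for label, value in examples.items():
    print(f"{label}: ~${value:,.2f}B")
```

Note that 737% growth means revenue rose to about 8.4 times its prior level, not 7.4 times.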

The velocity of this expansion is being driven by multiple converging factors. Enterprise AI adoption is accelerating rapidly, with Gartner predicting that by 2027, more than 50% of the generative AI models used by enterprises will be specific to either an industry or business function—up from approximately 1% in 2023[130]. This shift from general-purpose to specialized AI applications is creating unprecedented demand for dedicated computing infrastructure.

Hyperscale cloud providers are leading this investment surge. Microsoft has announced plans to invest $80 billion in fiscal 2025 specifically for AI-enabled data centers, with over half targeted for U.S. infrastructure—nearly double their total capital expenditure from just four years ago[22]. Amazon, Google, and Meta have made similarly massive commitments, with the top four hyperscalers accounting for 44% of Q1 2025 data center capital investments totaling $134 billion[28].

The AI infrastructure buildout is not limited to traditional technology hubs. Data centers are increasingly being deployed in diverse geographic locations to optimize for power availability, cooling efficiency, and regulatory environments. Northern Virginia continues to dominate as "Data Center Alley," but significant expansion is occurring in Texas, Iowa, and other regions with abundant renewable energy resources[56].

Internationally, the Asia-Pacific region is experiencing particularly rapid growth. Bank of America predicts that data center capacity in the Asia-Pacific region will double within the next five years, representing an additional 2 GW of capacity added annually—double the growth rate of the previous five-year period[59]. Countries including Japan, South Korea, Indonesia, Malaysia, Thailand, and the Philippines are emerging as key markets, driven by increasing demand for localized AI services and data sovereignty requirements.

Economic Multiplier Effects and Industry Transformation

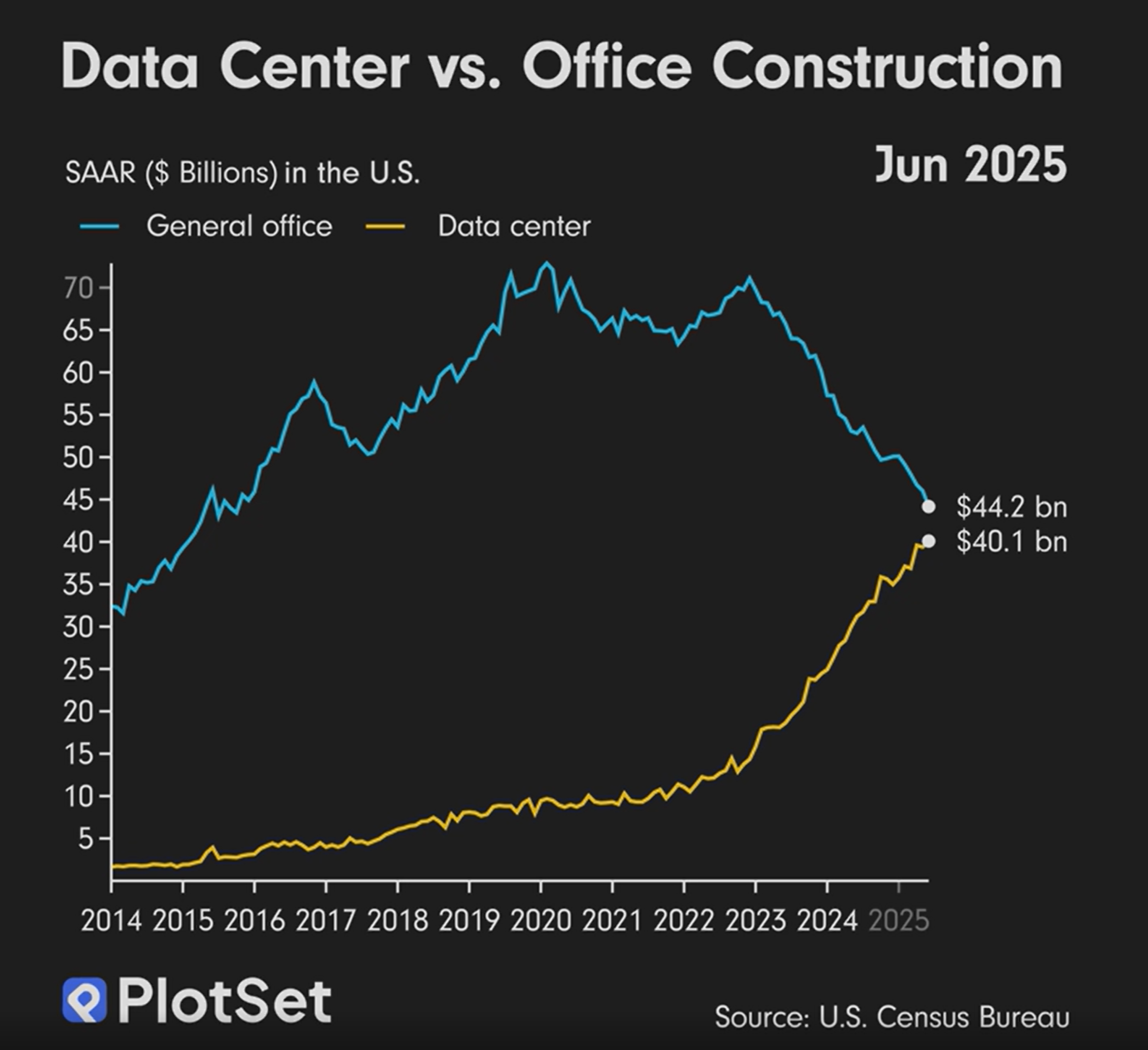

The AI infrastructure expansion is creating significant economic multiplier effects across multiple sectors. According to McKinsey analysis, investment in data centers can drive GDP growth, create thousands of high-paying jobs, and spur innovation across various sectors. Virginia alone generated approximately $31 billion in supported economic output from data center construction and operations in 2023[88]. U.S. Census Bureau data also indicate that spending on data center construction is set to surpass spending on general office construction in 2025, a notable shift in the composition of U.S. commercial construction.

The construction phase of a typical large data center (around 250,000 square feet) can add up to 1,500 workers on-site, requiring crews of site developers, equipment operators, construction workers, electricians, and technicians, many earning wages upward of $100,000 per year. For steady-state operations, more than 50 permanent jobs are created, and for every job inside a data center, an estimated 3.5 additional jobs are created in the surrounding economy[88].
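The employment multiplier above works simply: each direct job supports about 3.5 more. The sketch below treats that 3.5 figure as a fixed ratio and uses the "more than 50" permanent jobs as a floor. The fixed ratio is a simplifying assumption, since actual multipliers vary by region and by how indirect jobs are counted. The function name is illustrative.

```python
def total_jobs(direct_jobs: int, indirect_per_direct: float = 3.5) -> float:
    """Direct operational jobs plus the estimated jobs supported elsewhere."""
    return direct_jobs * (1.0 + indirect_per_direct)


DIRECT = 50  # "more than 50 permanent jobs" from the text, used as a floor
total = total_jobs(DIRECT)
print(f"{DIRECT} direct jobs -> ~{total:.0f} total, "
      f"{total - DIRECT:.0f} of them outside the facility")
```

On these assumptions, a single large facility would support at least about 225 jobs once it is running.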

Unlike traditional cloud or enterprise facilities, which typically operate under 8 kW per rack, AI-ready builds are rapidly pushing toward 30–50 kW rack densities. This dramatic escalation is confirmed in Uptime's 2024 Global Data Center Survey, where operators report a growing urgency to upgrade legacy facilities or construct new high-density ones designed explicitly for AI clusters (Donnellan, 2024).
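Rack-density figures translate directly into per-rack power. The sketch below multiplies a hypothetical number of DGX H100 systems per rack by the roughly 10.2 kW per-system figure cited in this article's cooling discussion. The systems-per-rack values are illustrative assumptions, because real layouts depend on the power and cooling available. Networking and storage equipment are ignored.

```python
# Per-rack power for GPU systems, compared with a legacy enterprise rack.
DGX_H100_KW = 10.2    # approx. per-system draw cited in this article
LEGACY_RACK_KW = 8.0  # traditional facilities typically run under 8 kW per rack


def rack_power_kw(systems_per_rack: int, kw_per_system: float = DGX_H100_KW) -> float:
    """Total IT power in one rack, ignoring networking and storage gear."""
    return systems_per_rack * kw_per_system


for n in (1, 2, 4):  # hypothetical packing densities
    kw = rack_power_kw(n)
    print(f"{n} system(s) per rack: {kw:.1f} kW, {kw / LEGACY_RACK_KW:.1f}x a legacy rack")
```

Four systems come to about 41 kW. That is inside the 30-50 kW band described above and roughly five times a legacy rack.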

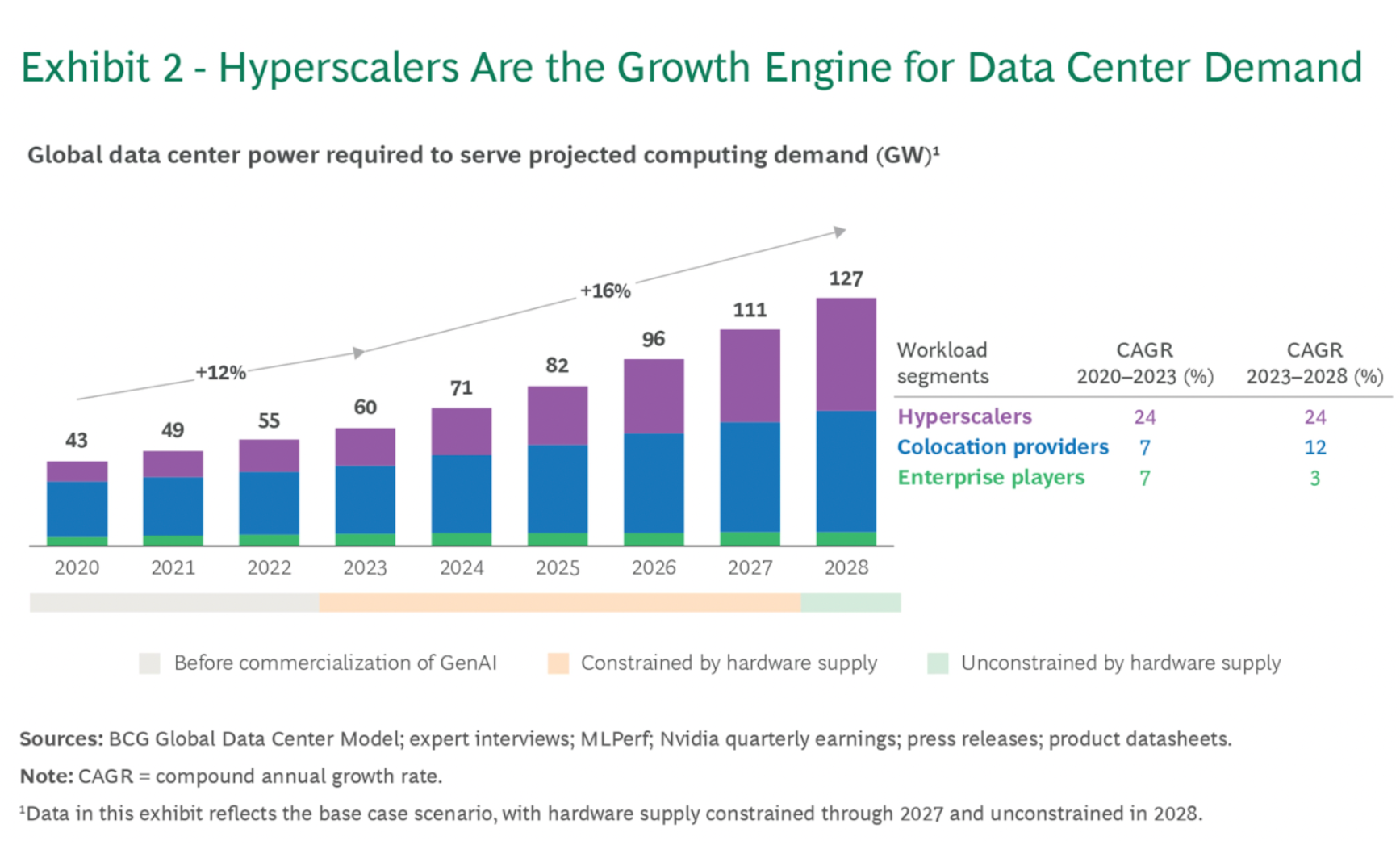

The graph above helps contextualize the shift. Hyperscalers remain the primary engine of demand. Colocation providers and enterprise players, however, are taking on larger roles in meeting AI infrastructure needs (Global Data Center Market Report 2025). These players often rely on third-party capital or partnerships to secure land, power, and compute infrastructure. They are frequently backed by REITs, private equity firms, and energy-focused funds, and they now account for a growing share of global capacity. Their emergence diversifies not only who operates infrastructure but also who finances it, underscoring how capital investment is rapidly expanding beyond the big four hyperscalers.

Economic Impact: Capital Intensity and Accelerated ROI

Meanwhile, Deloitte notes that the ROI timeline for AI infrastructure has compressed to 3–5 years, driven by soaring demand and faster monetization cycles. Many companies now design AI infrastructure with revenue generation in mind from day one, accelerating value realization (Can US infrastructure keep up with the AI economy?).
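A 3-5 year ROI timeline is usually expressed as a payback period. The sketch below computes both simple payback and a discounted variant for a hypothetical $500 million AI cluster earning a constant annual net cash flow. The capex, the cash flow, and the 8% discount rate are illustrative assumptions, not Deloitte figures.

```python
from typing import Optional


def simple_payback_years(capex: float, annual_cash_flow: float) -> float:
    """Years to recover capex from a constant annual net cash flow, undiscounted."""
    if annual_cash_flow <= 0:
        raise ValueError("annual cash flow must be positive")
    return capex / annual_cash_flow


def discounted_payback_years(capex: float, annual_cash_flow: float,
                             rate: float, max_years: int = 30) -> Optional[float]:
    """Years until discounted cash flows recover capex (linear within the final year)."""
    remaining = capex
    for year in range(1, max_years + 1):
        pv = annual_cash_flow / (1.0 + rate) ** year  # present value of this year's flow
        if pv >= remaining:
            return year - 1 + remaining / pv
        remaining -= pv
    return None  # not recovered within max_years


CAPEX = 500e6      # hypothetical cluster cost, USD
CASH_FLOW = 125e6  # hypothetical annual net cash flow, USD
print(f"simple payback: {simple_payback_years(CAPEX, CASH_FLOW):.1f} years")
print(f"discounted at 8%: {discounted_payback_years(CAPEX, CASH_FLOW, 0.08):.1f} years")
```

With these inputs, undiscounted payback takes 4 years, and discounting stretches it to about 5. This shows how sensitive a 3-5 year window is to the cost of capital.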

The economics of AI infrastructure investment have fundamentally changed compared to traditional data center deployments. While capital expenditures have increased dramatically—with hyperscalers expected to increase year-over-year spending by 44% to $371 billion in 2025—the revenue potential and strategic value have grown proportionally[31].

AI infrastructure investments are increasingly evaluated using comprehensive ROI frameworks that account for both tangible and intangible benefits. Tangible benefits include direct cost savings from automation, increased processing efficiency, and reduced operational expenses. A study in healthcare showed that AI-powered diagnostic platforms could achieve 451% ROI over five years, increasing to 791% when radiologist time savings were included[130].
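ROI figures like these are easier to interpret as benefit multiples. The sketch below assumes the study uses the conventional definition, ROI = (total benefit - total cost) / total cost. Under that definition, a 451% ROI means about 5.51 dollars of benefit per dollar spent. The helper names are illustrative.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """Conventional ROI: net benefit divided by cost (0.5 == 50%)."""
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (total_benefit - total_cost) / total_cost


def benefit_per_dollar(roi_fraction: float) -> float:
    """Gross benefit returned per dollar of cost for a given ROI fraction."""
    return 1.0 + roi_fraction


for label, r in {"base case": 4.51, "with radiologist time savings": 7.91}.items():
    print(f"{label}: {r:.0%} ROI -> ${benefit_per_dollar(r):.2f} per $1 spent")
```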

Intangible benefits encompass improved competitive positioning, enhanced innovation capability, and strategic optionality for future AI applications. The rapid evolution of AI applications means that infrastructure investments today may enable entirely new revenue streams that don't yet exist, making traditional payback calculations insufficient for capturing full economic value.

Investment Analysis and Financial Projections

The global data center market was valued at $347.60 billion in 2024 and is projected to reach $652.01 billion by 2030, representing a compound annual growth rate of 11.2%[138]. However, AI-specific infrastructure is growing significantly faster, with some segments experiencing growth rates exceeding 30% annually.
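The standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1, can check the growth rate above. The sketch assumes the six-year span 2024-2030. That span gives roughly 11.1%, close to the quoted 11.2%. The small gap plausibly reflects rounding or a different base year in the underlying report.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


START, END = 347.60, 652.01  # global data center market, USD billions
YEARS = 2030 - 2024
rate = cagr(START, END, YEARS)
print(f"implied CAGR 2024-2030: {rate:.1%}")
# Compounding forward at the implied rate should recover the 2030 figure.
print(f"check: {START * (1 + rate) ** YEARS:.2f}")
```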

Synergy Research Group projects that hyperscale data center capacity will triple by 2030, driven primarily by generative AI technology requirements[131]. The average capacity of hyperscale data centers to be opened over the next four years will be almost double that of current operational facilities, reflecting the power-intensive nature of AI workloads.

AI infrastructure investments are demonstrating strong ROI metrics when properly implemented and measured. Healthcare applications have shown particularly strong returns, with AI-powered diagnostic platforms achieving 451% ROI over five years, increasing to 791% when time savings are included[130]. However, ROI is highly sensitive to implementation factors, application types, and measurement timeframes.

The compressed ROI timeline of 3-5 years for AI infrastructure reflects several factors: premium pricing for specialized AI services, improved operational efficiency through automation, and strategic value from enabling new business models and revenue streams. However, continuous performance monitoring is essential as AI model performance may degrade over time without proper maintenance[130].

Emergence of AI-Native Infrastructure Providers

The specialized requirements of AI workloads have created opportunities for new categories of infrastructure providers. Companies like CoreWeave exemplify this trend, having evolved from cryptocurrency mining to become a dedicated AI cloud infrastructure provider. CoreWeave operates more than 250,000 NVIDIA GPUs across 32 data centers, with revenue growing 737% year-over-year to $1.9 billion in 2024[129].

These "neocloud" providers focus exclusively on delivering GPU-as-a-Service solutions optimized for artificial intelligence applications. Their specialized approach allows them to achieve superior performance metrics. CoreWeave's MLPerf benchmark shows its NVIDIA H100 Tensor Core GPU cluster can complete training in 11 minutes, 29 times faster than the nearest competitor, while internal tests demonstrate up to 20% better system efficiency[132].

The world's largest hyperscalers are pursuing different strategies to meet AI infrastructure demands. Microsoft leads with its comprehensive Azure AI infrastructure, supporting both internal developments like Copilot and partnerships with OpenAI. The company's $80 billion investment plan includes modular construction approaches for rapid deployment and strategic placement in regions with favorable energy policies[23].

Amazon Web Services continues expanding despite recent project reassessments, focusing on integrated solutions that combine AI training and inference capabilities. Google's approach emphasizes custom TPU development alongside NVIDIA GPU deployments, while Meta concentrates on facilities specifically designed for large language model training and inference workloads requiring sustained, high-density computing power[22].

Market Competition and Industry Consolidation (Traditional Data Center REIT Evolution)

Established data center REITs are rapidly adapting their business models to serve high-density AI workloads. Equinix, with 22 years of consecutive quarterly revenue growth and targeting $9 billion in annual revenue, has logged more than 400 MW of hyperscale capacity through its xScale joint venture while maintaining its premium retail colocation franchise[129].

Digital Realty has pivoted toward a full-spectrum model combining hyperscale campuses with high-density colocation. Recent land acquisitions in Texas and North Carolina are designed for 100MW+ scale developments, with the company signing 166 new customers in Q4 2024 alone. Both companies are investing heavily in liquid cooling systems, GPU-ready pods, and modular expansion capabilities to serve AI-native tenants[129].

Iron Mountain exemplifies the financial discipline shaping the market, growing data center revenue 25% in 2024 while selectively choosing hyperscale deals. The company passed on a 130MW lease that didn't meet return on investment thresholds, demonstrating how operators are prioritizing profitability over pure growth[129].

The emergence of AI-native infrastructure companies such as CoreWeave is a significant market development, and it shows the commercial potential of specialized positioning[129]. That positioning also brings concentration: Microsoft alone accounted for 62% of CoreWeave's revenue. The company is targeting a valuation of $32-35 billion in its initial public offering and has raised more than $12 billion in debt and equity funding for expansion. However, the model carries significant risks, including high debt levels, customer concentration, and dependence on continued growth in AI demand[132].

Supply Chain Economics and Market Dynamics

The AI infrastructure boom has created unprecedented demand-supply imbalances across critical components. High-bandwidth memory (HBM3), specialized GPUs, and advanced packaging components face six- to twelve-month lead times amid capacity constraints[55]. SK Hynix, Samsung, and Micron—the three dominant HBM producers—are operating near full capacity, creating bottlenecks that could limit overall infrastructure deployment speed.

These supply constraints are driving strategic partnerships and vertical integration efforts. Hyperscalers are signing long-term supply agreements and making direct investments in semiconductor capacity to secure access to critical components. The semiconductor supply chain is so complex that a demand increase of about 20% or more has a high likelihood of causing shortages, and the AI explosion could easily surpass that threshold[58].

On the revenue side, the compressed return-on-investment (ROI) timelines reflect monetization strategies that begin generating revenue from the first day of operation. Traditional infrastructure investments have lengthy payback periods. AI infrastructure, by contrast, is increasingly designed to earn revenue immediately through:

Infrastructure-as-a-Service models, in which specialized GPU resources command premium pricing for AI training and inference workloads. CoreWeave's success illustrates this approach. Because AI workloads are specialized, its gross margins are significantly higher than those of traditional cloud services[132].

Edge inference deployment enabling real-time AI applications with strict latency requirements. This distributed approach allows infrastructure providers to capture value from proximity to end users while reducing bandwidth costs for hyperscale providers[113].

Hybrid training and inference architectures that maximize utilization by supporting both compute-intensive training workloads during off-peak hours and latency-sensitive inference during peak demand periods.
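To make the utilization argument concrete, here is a toy calculation. It shows how backfilling off-peak hours with training jobs raises a cluster's average utilization and shortens its simple payback period. Every input is a hypothetical placeholder, not a figure from CoreWeave or any other provider. These include the hourly inference demand curve, the 95% training ceiling, the per-GPU cost, the price per GPU-hour, and the operating cost. The sketch also assumes that training and inference hours are billed at the same rate, and it ignores financing, depreciation, and power-price variation.

```python
# Toy model of the hybrid training/inference utilization argument.
# Every number is a hypothetical placeholder, not pricing or cost data from any provider.

HOURS_PER_YEAR = 24 * 365

# Hypothetical fraction of the cluster needed for inference in each hour of a day:
# quiet overnight, peaking during business hours.
INFERENCE_DEMAND = [0.2] * 7 + [0.6] * 2 + [0.9] * 8 + [0.6] * 3 + [0.3] * 4
assert len(INFERENCE_DEMAND) == 24

TRAINING_CAP = 0.95  # assumed ceiling that leaves headroom for maintenance and bursts


def average_utilization(inference_demand: list[float], backfill_training: bool) -> float:
    """Mean fraction of the cluster doing paid work over one day.

    With backfill_training=True, capacity left idle by inference in each hour is
    filled with preemptible training jobs up to TRAINING_CAP.
    """
    busy = [max(d, TRAINING_CAP) if backfill_training else d for d in inference_demand]
    return sum(busy) / len(busy)


def payback_years(capex: float, price_per_gpu_hour: float, gpus: int,
                  utilization: float, opex_per_year: float) -> float:
    """Simple undiscounted payback: capital cost divided by annual operating margin."""
    revenue = price_per_gpu_hour * gpus * HOURS_PER_YEAR * utilization
    margin = revenue - opex_per_year
    return float("inf") if margin <= 0 else capex / margin


if __name__ == "__main__":
    gpus = 1_000
    capex = gpus * 35_000.0  # hypothetical all-in cost per GPU, in dollars
    price = 2.50             # hypothetical blended price per GPU-hour
    opex = 8_000_000.0       # hypothetical annual power, staff, and facility cost
    for label, backfill in [("inference only", False), ("hybrid", True)]:
        util = average_utilization(INFERENCE_DEMAND, backfill)
        years = payback_years(capex, price, gpus, util, opex)
        print(f"{label}: utilization {util:.0%}, payback {years:.1f} years")
```

With these made-up inputs, backfilling raises average utilization from about 53% to 95%. It also cuts the payback period from roughly 9.5 years to under 3. What matters is the direction of the effect, not the magnitudes.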

International Competition and Strategic Positioning

Global competition in AI infrastructure is intensifying, with international players pursuing different strategies. Huawei's CloudMatrix 384, powered by Ascend 910C chips, is China's answer to NVIDIA's GB200 platform, although it remains less efficient per watt[129]. This shows that the global AI infrastructure race is not confined to Western companies and involves significant geopolitical competition.

Segro, the UK REIT known for logistics, has entered the data center space through a £1 billion joint venture with Pure Data Centres, designing fully fitted facilities for AI workloads pre-let to hyperscalers[129]. This international expansion reflects growing recognition that AI infrastructure represents a critical competitive advantage for national economies.

In this first part, we’ve explored the explosive growth in AI infrastructure spending, the strategies of the hyperscalers, the economic effects of the buildout, and rising global competition and geopolitical positioning. In Part 2, we’ll examine the challenges, risks, and regional strategies of the $7 trillion AI infrastructure buildout. Stay tuned; it will be published soon.

AI's Original Sin: Copyright Confusion at the Core of Machine Learning

In boardrooms from New York to Silicon Valley, a troubling pattern emerges whenever executives discuss their AI strategies. The conversation inevitably stalls on one fundamental question: "What data is actually in our models?" This uncertainty is more than a technical curiosity; it has become a defining business challenge of the AI era.

Recent research reveals the scope of this challenge. MIT's Data Provenance Initiative systematically audited 1,800 public AI training datasets. It found that 68% lack proper licensing documentation and that license information on widely used dataset hosting sites is miscategorized roughly half the time. For business leaders, this translates into what risk management professionals call "unknown unknowns": exposures they cannot quantify, manage, or price effectively.

This data opacity crisis demands strategic attention from professionals and enterprise executives alike. While the technical capabilities of artificial intelligence continue to advance rapidly, the fundamental question of training data provenance threatens to undermine long-term AI adoption across industries.

Understanding the Business Impact

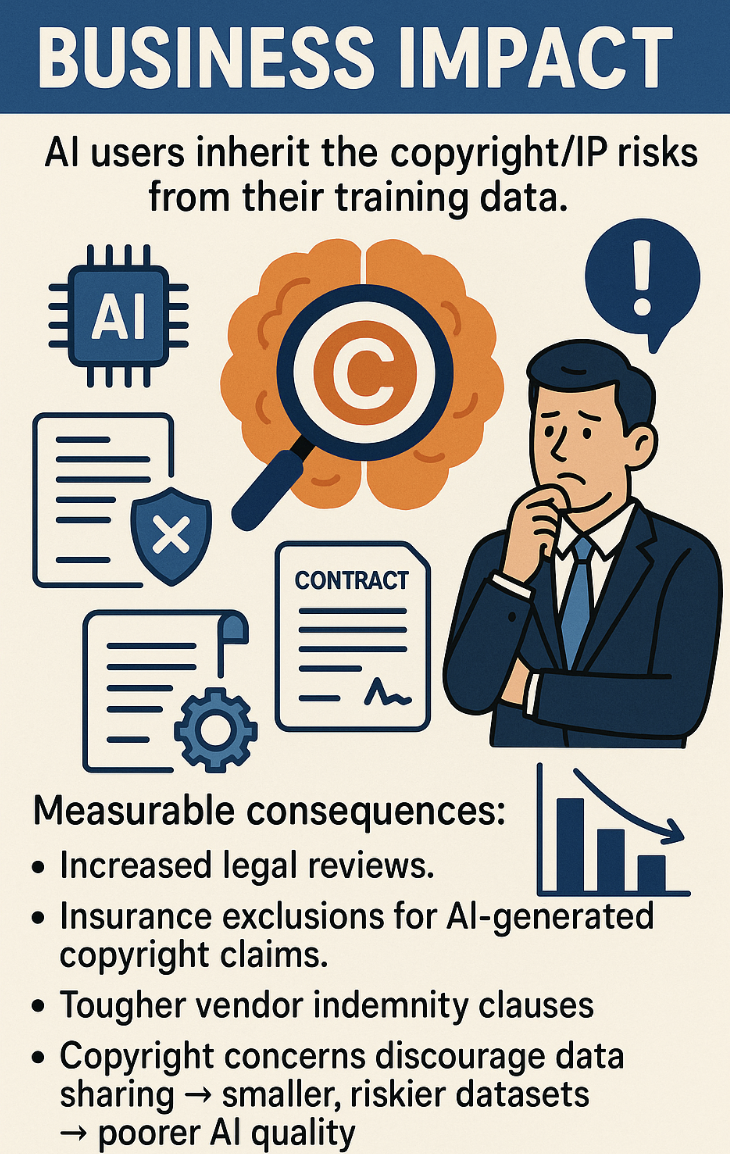

The challenge extends far beyond legal compliance. Organizations deploying AI systems inherit the copyright and intellectual property risks embedded in their training data, creating liability concerns that traditional risk frameworks struggle to address. When companies use AI tools for content creation, customer service, or strategic analysis, they potentially expose themselves to copyright infringement claims based on data decisions made by third-party AI developers.

This uncertainty carries measurable business costs. Corporate legal departments report significant increases in AI-related risk assessments, and some insurers exclude copyright claims involving AI-generated content from standard policies. Procurement teams now demand extensive indemnification clauses from AI vendors, but these protections may prove inadequate given the scale of potential exposure.

Perhaps most significantly, the uncertainty creates a negative feedback loop that limits AI model quality. Enterprise executives report that copyright concerns discourage data sharing initiatives, reducing the diversity and quality of training datasets while paradoxically increasing legal risk through greater reliance on potentially problematic public datasets.

The Technical Root of the Problem

In traditional software, dependencies can be explicitly pinned and tracked through package manifests and version control systems. Machine learning models, by contrast, absorb their training data in ways that make retrospective analysis extraordinarily difficult. During training, neural networks pass millions or even billions of data points through multiple transformation layers. The result is distributed representations that blend elements from across the entire training corpus.

This fundamental architecture makes it virtually impossible to determine whether specific copyrighted content influenced model outputs without comprehensive provenance tracking from the initial development stages. The computational efficiency that makes large language models powerful also makes them opaque to post-hoc analysis.
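For code, software teams sidestep this problem by pinning exact dependency versions in a lockfile. A rough analogue for training data is a manifest, written before training begins, that records a content hash, source, and license for every file. The sketch below builds and later re-verifies such a manifest using only Python's standard library. The field names (`source`, `license`) and the JSON layout are illustrative assumptions, not an established standard.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large shards never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path, metadata: dict[str, dict[str, str]]) -> dict:
    """Record hash, size, source, and license for every file under data_dir.

    `metadata` maps relative paths to hypothetical {"source": ..., "license": ...}
    entries; files without an entry are marked "UNKNOWN" so gaps stay visible.
    """
    entries = []
    for path in sorted(p for p in data_dir.rglob("*") if p.is_file()):
        rel = path.relative_to(data_dir).as_posix()
        info = metadata.get(rel, {})
        entries.append({
            "path": rel,
            "sha256": sha256_of_file(path),
            "bytes": path.stat().st_size,
            "source": info.get("source", "UNKNOWN"),
            "license": info.get("license", "UNKNOWN"),
        })
    return {"version": 1, "files": entries}


def verify_manifest(data_dir: Path, manifest: dict) -> list[str]:
    """Return recorded paths that are now missing or whose contents have changed."""
    changed = []
    for entry in manifest["files"]:
        path = data_dir / entry["path"]
        if not path.is_file() or sha256_of_file(path) != entry["sha256"]:
            changed.append(entry["path"])
    return changed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "news.txt").write_text("licensed article text")
        (root / "forum.txt").write_text("scraped forum post")
        manifest = build_manifest(
            root, {"news.txt": {"source": "publisher feed", "license": "commercial"}}
        )
        print(json.dumps(manifest, indent=2))
        (root / "forum.txt").write_text("edited after training began")
        print(verify_manifest(root, manifest))  # ['forum.txt']
```

Marking unlabeled files as `UNKNOWN` rather than skipping them is a deliberate choice. It keeps the gaps visible in the record that auditors and lawyers will later read.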

Federal research agencies have begun documenting this technical challenge systematically. The National Institute of Standards and Technology (NIST) AI Risk Management Framework is voluntary, but it calls for "end-to-end lineage evidence" that traces data from initial collection through all processing stages to final model deployment. Current industry practices fall substantially short of these expectations, creating compliance gaps across the enterprise AI landscape.

Emerging Legal Landscape

The legal environment surrounding AI training data continues to evolve rapidly, creating additional uncertainty for business planning. Recent court decisions have produced mixed results that offer little clarity for enterprise risk assessment. Some courts have found that AI training constitutes fair use under copyright law, particularly when the training process is "exceedingly transformative" and when companies have legally purchased training content.

However, other decisions have reached the opposite conclusion, particularly when AI systems compete directly with the original content creators. In Thomson Reuters v. Ross Intelligence, the federal district court in Delaware found that Ross's use of copyrighted Westlaw headnotes to build an AI legal research tool was not fair use. The court reasoned that the resulting product competed in the same market as the original.

These cases highlight the fundamental uncertainty facing enterprise AI adoption. Organizations cannot reliably predict whether their AI implementations will face successful copyright challenges, making strategic planning and risk management extraordinarily difficult.

Strategic Solutions for Enterprise Leaders

Despite these challenges, practical solutions are emerging that enable organizations to implement AI systems while managing copyright and provenance risks effectively. Leading enterprises are developing comprehensive data governance frameworks that combine technical solutions with organizational processes to create verifiable audit trails throughout the AI development lifecycle.

Automated Provenance Tracking forms the foundation of effective solutions. Some systems use blockchain-based or other append-only ledger technologies to create immutable records of every data transformation in the AI development pipeline. Each processing step, from initial collection through preprocessing, training, and deployment, generates a cryptographically signed entry. These entries provide tamper-evident documentation while enabling collaborative development across multiple organizations.
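Tamper evidence does not depend on a blockchain specifically. The core mechanism is that each entry commits to the hash of the entry before it and carries a signature, so changing any past record breaks every later link. The sketch below shows that mechanism as a local hash chain. As a simplifying assumption, it uses an HMAC with a shared secret from Python's standard library. A multi-organization deployment would instead need public-key signatures such as Ed25519. The step names and detail fields are hypothetical.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_digest(body: dict) -> str:
    # Canonical JSON (sorted keys, no extra whitespace) so equal bodies hash equally.
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(canonical).hexdigest()


def _sign(key: bytes, digest: str) -> str:
    # HMAC stands in for a real digital signature in this sketch.
    return hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()


def append_entry(log: list[dict], step: str, details: dict, key: bytes) -> dict:
    """Append a provenance record that commits to the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"index": len(log), "step": step, "details": details, "prev_hash": prev_hash}
    digest = _entry_digest(body)
    entry = {**body, "hash": digest, "signature": _sign(key, digest)}
    log.append(entry)
    return entry


def verify_log(log: list[dict], key: bytes) -> bool:
    """Recompute every hash, link, and signature; any edit to a past entry fails."""
    prev_hash = GENESIS
    for index, entry in enumerate(log):
        if entry["index"] != index or entry["prev_hash"] != prev_hash:
            return False
        body = {k: entry[k] for k in ("index", "step", "details", "prev_hash")}
        digest = _entry_digest(body)
        if digest != entry["hash"]:
            return False
        if not hmac.compare_digest(_sign(key, digest), entry["signature"]):
            return False
        prev_hash = digest
    return True


if __name__ == "__main__":
    key = b"demo-secret"  # hypothetical; real signing keys belong in an HSM or KMS
    log: list[dict] = []
    append_entry(log, "collect", {"dataset": "news-2024", "license": "commercial"}, key)
    append_entry(log, "preprocess", {"dedup": True}, key)
    append_entry(log, "train", {"model": "demo-model-v1"}, key)
    print(verify_log(log, key))  # True
    log[1]["details"]["dedup"] = False  # tamper with a middle record
    print(verify_log(log, key))  # False
```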

Source-Labeling Systems embed machine-readable metadata directly into training data using emerging standards like C2PA (Coalition for Content Provenance and Authenticity). These persistent identifiers specify data origins, ownership rights, and usage permissions, enabling automated processing systems to make real-time decisions about data usage compliance.
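The decision logic that such labels enable can be sketched simply. The example below attaches a small label (origin, owner, permitted uses) to each record. It then filters a corpus down to the records whose labels explicitly permit a given use. The label schema and the use names such as `"ai-training"` are hypothetical simplifications. This is not the C2PA manifest format, which is considerably richer and binds its claims to assets with cryptographic signatures.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class SourceLabel:
    """Hypothetical machine-readable label attached to one training record."""
    origin: str  # where the data came from, e.g. a URL or feed name
    owner: str   # rights holder
    permitted_uses: frozenset = field(default_factory=frozenset)  # e.g. {"ai-training"}


@dataclass(frozen=True)
class Record:
    text: str
    label: Optional[SourceLabel]  # None means the record arrived without a label


def filter_for_use(records: list[Record], use: str) -> tuple[list[Record], list[Record]]:
    """Split records into (allowed, rejected); unlabeled records are rejected by default."""
    allowed, rejected = [], []
    for record in records:
        if record.label is not None and use in record.label.permitted_uses:
            allowed.append(record)
        else:
            rejected.append(record)
    return allowed, rejected


if __name__ == "__main__":
    corpus = [
        Record("licensed article",
               SourceLabel("feed://publisher", "Publisher A", frozenset({"ai-training"}))),
        Record("display-only caption",
               SourceLabel("https://example.com/p/1", "Agency B", frozenset({"display"}))),
        Record("scraped forum post", None),
    ]
    allowed, rejected = filter_for_use(corpus, "ai-training")
    print(len(allowed), len(rejected))  # 1 2
```

The default-deny rule is the important design choice here. A record with no label is treated as not permitted rather than silently admitted.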

Automated Copyright Detection provides tools for filtering content in real time during model training. Advanced systems maintain large databases of known copyrighted content and compare training data against these references, using neural networks trained specifically for copyright detection. Reported accuracy rates exceed 95% for text, image, and audio content.
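Production detectors are proprietary. The basic "compare against a reference set" step can still be illustrated with a simpler technique swapped in for the neural approach: word n-gram shingling with Jaccard similarity, a standard way to flag near-verbatim overlap. This sketch will not catch paraphrase. It also says nothing about legal infringement, which turns on more than textual overlap. The shingle size of 5 and the 0.5 threshold are arbitrary assumptions.

```python
import re


def shingles(text: str, n: int = 5) -> set[tuple[str, ...]]:
    """Lowercased word n-grams; a text shorter than n words yields one short shingle."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < n:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection over size of the union; 0.0 when both sets are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_overlaps(candidates: list[str], references: dict[str, str],
                  n: int = 5, threshold: float = 0.5) -> list[tuple[int, str, float]]:
    """Return (candidate index, reference id, similarity) for pairs at or above threshold."""
    ref_shingles = {ref_id: shingles(text, n) for ref_id, text in references.items()}
    hits = []
    for idx, text in enumerate(candidates):
        cand = shingles(text, n)
        for ref_id, ref in ref_shingles.items():
            score = jaccard(cand, ref)
            if score >= threshold:
                hits.append((idx, ref_id, score))
    return hits


if __name__ == "__main__":
    references = {"ref-1": "the quick brown fox jumps over the lazy dog near the river bank"}
    candidates = [
        "The quick brown fox jumps over the lazy dog near the river bank!",
        "an entirely different sentence about data governance and audits",
    ]
    print(flag_overlaps(candidates, references))  # [(0, 'ref-1', 1.0)]
```

Exact pairwise comparison like this does not scale to web-sized corpora. At scale, Jaccard similarity is usually approximated with MinHash and locality-sensitive hashing.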

Building Competitive Advantage Through Compliance

Organizations implementing comprehensive data governance systems report significant advantages beyond mere compliance benefits. Systematic provenance tracking improves model performance through better data quality management, reduces development costs through automated compliance checking, and enhances competitive positioning through demonstrated ethical AI practices.

Early research suggests that proactive provenance implementation reduces total development costs by 15-30% compared to reactive compliance approaches. These savings result from decreased legal review requirements, reduced model retraining needs, and streamlined partnership negotiations with data providers.

Market analysis indicates that provenance-verified AI systems command premium pricing in enterprise markets, where customers increasingly prioritize legal and ethical compliance in vendor selection decisions. This trend suggests that comprehensive data governance may become a significant competitive differentiator rather than merely a regulatory burden.

Implementation Roadmap for Organizations

Successful implementation requires a systematic approach that addresses both immediate compliance needs and long-term sustainability requirements. Organizations should begin with comprehensive data asset inventories that identify all training datasets, their sources, licensing terms, and current usage patterns.
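A first-pass inventory can be as simple as a table of datasets with their source, license, and current use, plus a check that surfaces the gaps. The sketch below flags datasets that have missing sources, missing license terms, or licenses outside an allow-list. It also lists which models depend on flagged data. The dataset names, field names, and allow-list contents are hypothetical. Which licenses are acceptable for a given use is a legal determination, not something code can decide.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DatasetEntry:
    """One row of a hypothetical training-data inventory."""
    name: str
    source: Optional[str]
    license: Optional[str]
    used_in: list[str] = field(default_factory=list)  # models currently trained on it


# Hypothetical allow-list; real approval depends on legal review of each use.
APPROVED_LICENSES = {"CC0-1.0", "CC-BY-4.0", "commercial-agreement"}


def audit_inventory(entries: list[DatasetEntry]) -> dict:
    """Flag missing or unapproved terms and list the models that depend on flagged data."""
    issues: dict[str, list[str]] = {}
    for entry in entries:
        problems = []
        if not entry.source:
            problems.append("missing source")
        if not entry.license:
            problems.append("missing license")
        elif entry.license not in APPROVED_LICENSES:
            problems.append(f"license not approved: {entry.license}")
        if problems:
            issues[entry.name] = problems
    exposed = sorted({m for e in entries if e.name in issues for m in e.used_in})
    summary = Counter("flagged" if e.name in issues else "clear" for e in entries)
    return {"issues": issues, "exposed_models": exposed, "summary": dict(summary)}


if __name__ == "__main__":
    inventory = [
        DatasetEntry("licensed-news", "Publisher A feed", "commercial-agreement", ["summarizer"]),
        DatasetEntry("web-crawl-2023", "third-party crawl", None, ["summarizer", "chat-assistant"]),
        DatasetEntry("code-samples", "public repositories", "GPL-3.0", ["code-helper"]),
    ]
    report = audit_inventory(inventory)
    for name, problems in report["issues"].items():
        print(name, "->", "; ".join(problems))
    print("models touching flagged data:", report["exposed_models"])
```

The `exposed_models` list answers the practical question that follows any license problem: which deployed systems would need review or retraining.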

The technical infrastructure should combine blockchain-based provenance tracking with automated copyright detection and privacy-preserving training methodologies. This foundation provides the computational capabilities necessary for comprehensive data governance at enterprise scale.

Governance processes must integrate standardized documentation frameworks into all AI development workflows. Dataset cards and model cards provide structured formats for recording comprehensive provenance information, while version control systems track changes to both datasets and documentation throughout the development process.
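Dataset cards are commonly kept as a Markdown file with a YAML metadata header; Hugging Face dataset repositories follow this convention. Generating the card from code lets it be versioned alongside the data it describes. The sketch below renders a minimal card from Python dicts. The metadata keys and the provenance fields (`name`, `license`, `collected`) are a small hypothetical subset chosen for illustration. Values are written as JSON literals, which YAML also accepts, so the sketch assumes simple string and list values.

```python
import json


def render_dataset_card(meta: dict, description: str, provenance: list[dict]) -> str:
    """Render a minimal Markdown dataset card with a YAML metadata header.

    Values are emitted as JSON literals, which YAML parses as double-quoted
    scalars and flow sequences, so no YAML library is needed for simple values.
    """
    lines = ["---"]
    for key in sorted(meta):
        lines.append(f"{key}: {json.dumps(meta[key])}")
    lines += ["---", "", f"# {meta.get('pretty_name', 'Dataset card')}", ""]
    lines += [description.strip(), "", "## Provenance", ""]
    for src in provenance:
        lines.append(f"- {src['name']}: license {src['license']}, collected {src['collected']}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    card = render_dataset_card(
        {"pretty_name": "Support tickets 2023", "license": "other", "language": ["en"]},
        "Customer support tickets, anonymized before use.",
        [{"name": "internal CRM export", "license": "internal use", "collected": "2023-12"}],
    )
    print(card)
```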

Third-party auditing protocols enable independent verification of provenance claims through standardized assessment methodologies developed by organizations like NIST and academic research institutions. These protocols provide objective frameworks for evaluating data governance practices without requiring access to proprietary information.
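A hash commitment is one way to let an auditor check a provenance claim without seeing a proprietary dataset. Under this scheme, the developer publishes only the Merkle root of its file hashes. When a rights holder asks whether a specific file was used, the developer discloses that file's hash plus a short inclusion proof, and anyone can recompute the root. The sketch below is a generic Merkle tree that illustrates the idea. It is not a protocol that NIST or anyone else has standardized for this purpose. Duplicating the last node on odd-sized levels is one common convention among several. Note also that a commitment proves a file was included, not that the committed list is complete.

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _leaf(item: bytes) -> bytes:
    return _h(b"\x00" + item)  # prefix byte separates leaf hashes from internal-node hashes


def _node(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)


def merkle_root_and_proof(items: list[bytes], index: int) -> tuple[bytes, list[tuple[bytes, bool]]]:
    """Return the root and an inclusion proof for items[index].

    Each proof step is (sibling_hash, sibling_is_left). Odd-sized levels
    duplicate their last node before pairing.
    """
    if not items:
        raise ValueError("need at least one item")
    level = [_leaf(x) for x in items]
    proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        sibling = index ^ 1  # partner in the pair: index+1 if even, index-1 if odd
        proof.append((level[sibling], sibling < index))
        level = [_node(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify_inclusion(item: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path from the leaf to the root using only the disclosed siblings."""
    current = _leaf(item)
    for sibling, sibling_is_left in proof:
        current = _node(sibling, current) if sibling_is_left else _node(current, sibling)
    return current == root


if __name__ == "__main__":
    # Leaves are per-file SHA-256 hex digests, e.g. taken from a data manifest.
    files = [b"licensed article", b"forum post", b"product manual"]
    leaves = [hashlib.sha256(f).hexdigest().encode() for f in files]
    root, proof = merkle_root_and_proof(leaves, 1)
    print("published root:", root.hex())
    print(verify_inclusion(leaves[1], proof, root))  # True
```

The auditor sees one file hash and a handful of sibling hashes, logarithmic in the dataset size, rather than the dataset itself.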

Strategic Recommendations

Data provenance should be treated as a foundational requirement rather than an afterthought. Organizations that proactively address these challenges will be positioned to capture AI's competitive advantages while avoiding the legal and reputational risks that threaten reactive adopters.

The investment in comprehensive data governance systems pays dividends beyond compliance. Organizations with robust provenance tracking report improved stakeholder confidence, enhanced partnership opportunities with content creators, and differentiated market positioning based on ethical AI practices.

The regulatory environment is likely to keep tightening, with agencies moving toward mandatory transparency requirements and comprehensive disclosure mandates. Organizations that invest in governance infrastructure now will avoid the disruption and higher costs of retrofitting existing AI systems to meet future regulatory requirements.

The Path Forward

The resolution of AI's data challenges requires treating this as fundamentally a business strategy issue rather than purely a technical or legal problem. Organizations that implement systematic solutions based on proven technologies and comprehensive governance frameworks can establish sustainable competitive advantages while supporting continued innovation.